Beyond Tools: Exploring Human-Machine Relationships through the Metaphor of “Care”

How might the metaphor of “caring for” rather than “using” technology expand the design space for interactive systems? This question guided our recent workshop “Caring for Machines” at HfG Schwäbisch Gmünd, where we explored new ways of engaging with technological systems during our lab week.

Advances in sensor technology and artificial intelligence are gradually reshaping how we interact with technological systems. As these systems evolve from functional tools into more responsive counterparts, our relationship with technology moves beyond the traditional “technology as servant” metaphor. Contemporary technology design typically ranges from assistive technology that serves us as an obedient tool to demanding technology that competes for our attention through gamification and addictive interfaces. But what possibilities exist in the space between these poles? And how does our emotional attachment to technologies change how we relate to them, and to ourselves?

The workshop used “caring for a machine” as a conceptual lens for reimagining human-machine interaction. We proposed the metaphor of “care,” characterized by reciprocal relationships of giving and taking, as a way to explore the possibilities that arise between assistance and demand. Combining conceptual exploration with hands-on work, we introduced students to sensor-based machine learning and encouraged them to experiment with creative and unconventional ways of interacting with technological systems. Working with simple, accessible tools, students trained and deployed their own machine learning models, turning abstract ideas into tangible, interactive prototypes. The four resulting projects embody diverse aspects of care, interaction, and technological companionship.

The following sections showcase the students’ final projects and outline the workshop methods and process that guided their development.

Final Projects

Social Spark

Philipp Maginot, Sebastian Weinert

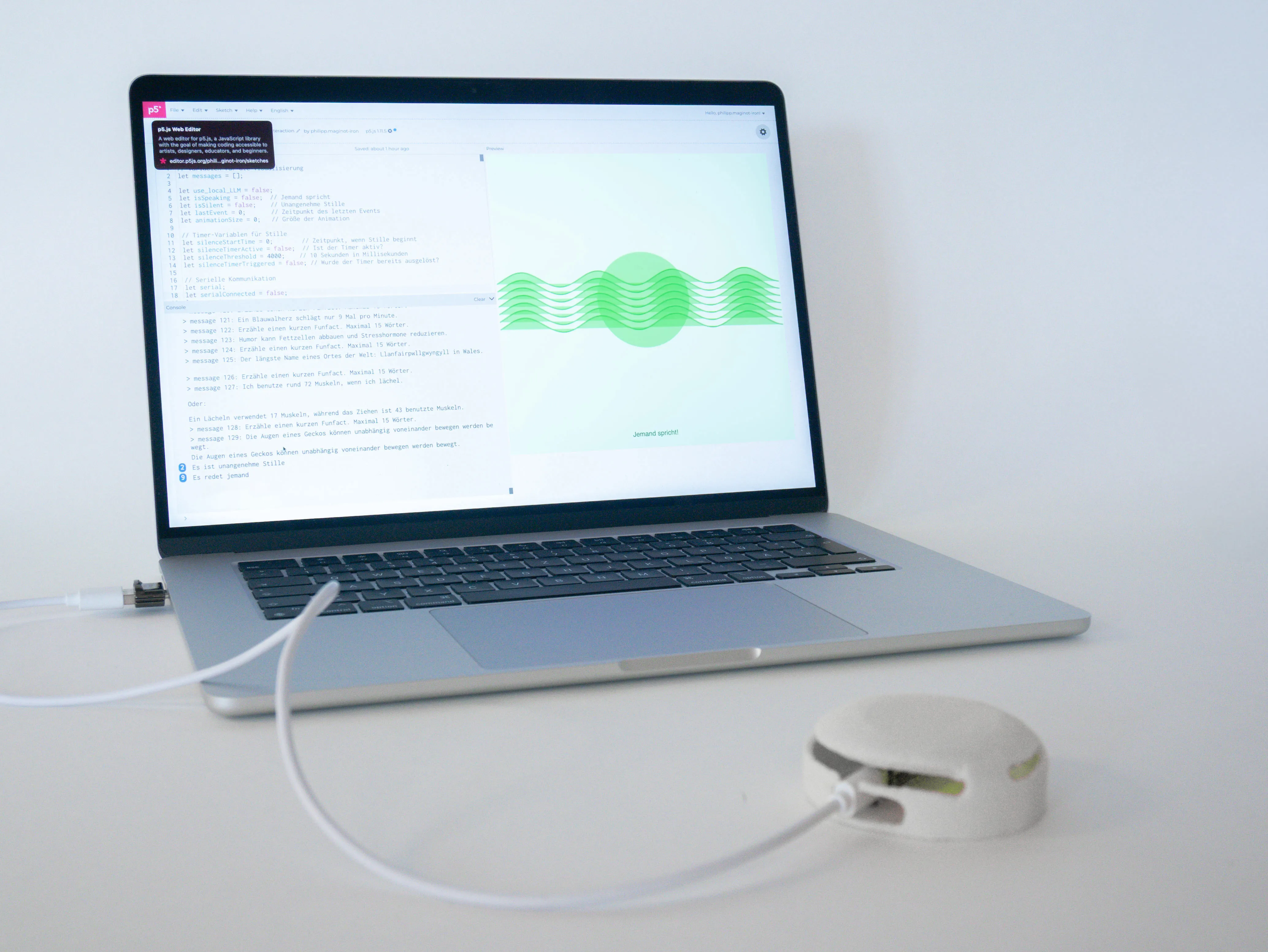

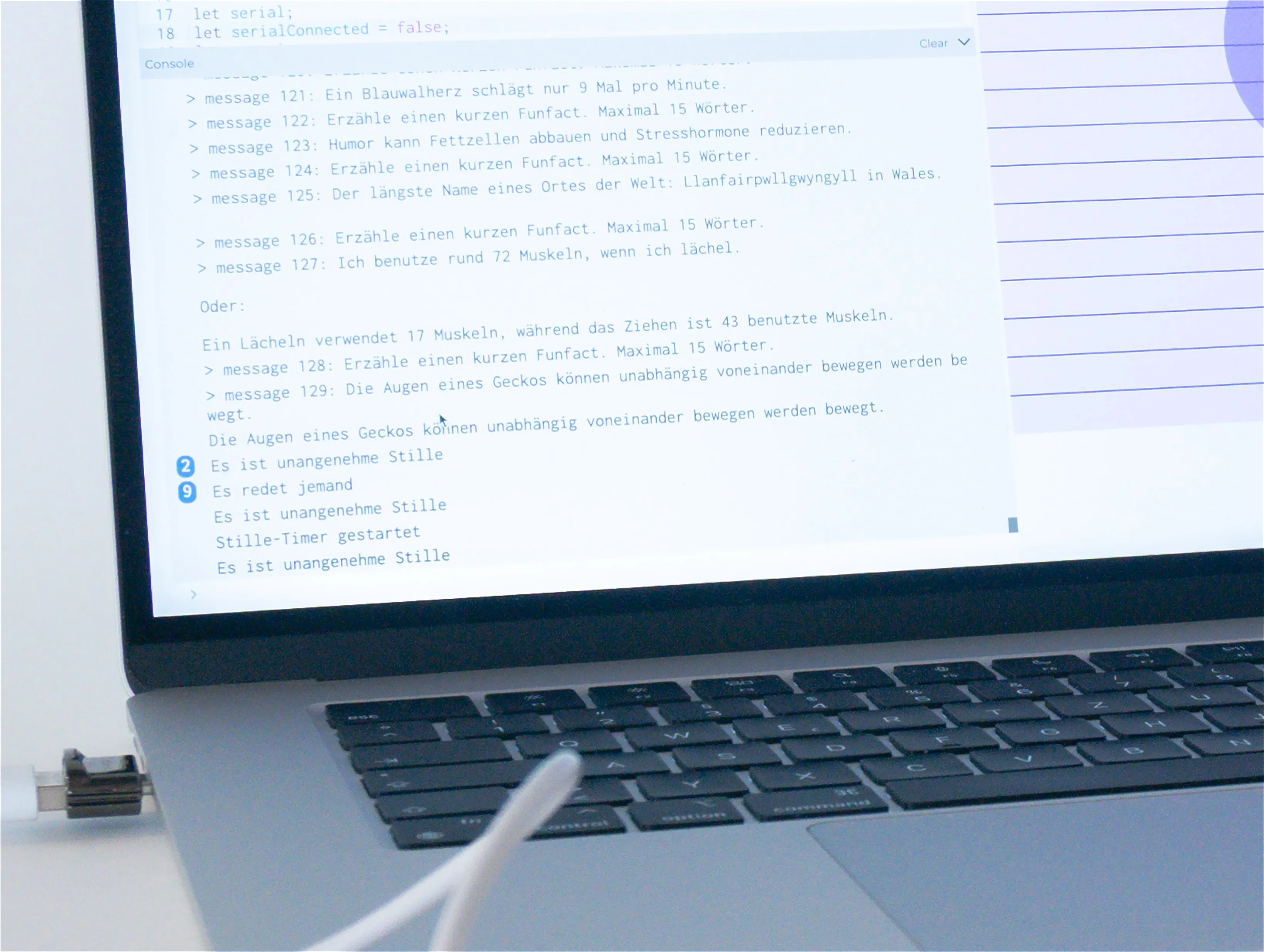

Social Spark tackles one of the most human challenges: awkward silences in conversation. This interactive table gadget detects conversational lulls and responds with well-timed jokes or interesting facts designed to catalyze social engagement. This prototype explores the potential role of technology in fostering social dynamics through ambient interventions.
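The project description does not say how Social Spark detects a lull. As a purely hypothetical sketch, assume an audio classifier (for example, one trained in Edge Impulse on microphone data) emits one label per audio window, either `speech` or something else. A lull is then declared after a fixed stretch without speech, with a cooldown so the table does not interrupt too often. The class name, the labels, and both thresholds are illustrative assumptions, not the students' implementation.

```python
from dataclasses import dataclass


@dataclass
class LullDetector:
    """Hypothetical lull detector fed with one classifier label per audio window."""
    lull_seconds: float = 8.0       # assumed: silence this long counts as a lull
    cooldown_seconds: float = 30.0  # assumed: minimum gap between two interventions
    last_speech_t: float = 0.0
    last_prompt_t: float = float("-inf")

    def update(self, t: float, label: str) -> bool:
        """Feed the label for the window ending at time t (seconds).

        Returns True when the table should offer a joke or a fact now.
        """
        if label == "speech":
            self.last_speech_t = t
            return False
        silent_for = t - self.last_speech_t
        cooled_down = t - self.last_prompt_t >= self.cooldown_seconds
        if silent_for >= self.lull_seconds and cooled_down:
            self.last_prompt_t = t
            return True
        return False
```

With `lull_seconds=5` and `cooldown_seconds=20`, feeding one label per second where the last speech window is at 2 s fires at 7 s and, if the silence continues, again at 27 s.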

Nature Pulse

Yingxun Li

Nature Pulse is an ambient companion that “feeds” on natural sounds. The system processes natural audio signatures such as bird calls, water sounds, and wind, and translates them into chromatic responses that accumulate over the course of the day. By evening, the accumulated light patterns serve both as functional illumination and as a reflective record of the day’s encounters with the environment. The prototype explores how technology can raise awareness of our connections with nature.
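One way to picture the accumulation is a running tally of recognized sound classes that is mixed into a single evening color. In this hypothetical sketch the class names, the palette, and the `saturation_count` brightness rule are all assumptions; the actual prototype may render a richer light pattern than one blended color.

```python
from collections import Counter
from typing import Tuple

# Hypothetical palette: one RGB color per recognized natural sound class.
PALETTE = {
    "birds": (255, 200, 0),
    "water": (0, 120, 255),
    "wind": (180, 255, 200),
}


class DayLog:
    """Accumulates sound detections over a day and mixes them into one evening color."""

    def __init__(self, saturation_count: int = 100) -> None:
        self.counts: Counter = Counter()
        self.saturation_count = saturation_count  # assumed: detections for full brightness

    def record(self, label: str) -> None:
        if label in PALETTE:  # ignore anything that is not a known natural sound
            self.counts[label] += 1

    def evening_color(self) -> Tuple[int, int, int]:
        total = sum(self.counts.values())
        if total == 0:
            return (0, 0, 0)
        # Average of the palette colors, weighted by how often each sound was heard
        mixed = [sum(PALETTE[k][ch] * n for k, n in self.counts.items()) / total
                 for ch in range(3)]
        # More detections during the day make the evening light brighter, up to full
        brightness = min(1.0, total / self.saturation_count)
        r, g, b = (round(c * brightness) for c in mixed)
        return (r, g, b)
```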

DanceBox

Carolin Schröpel, Taha Celik

DanceBox creates a dynamic dialogue between movement and music. Starting from a steady beat, this Arduino-powered device uses machine learning to recognize three distinct dance movements, each of which adds a layer to the rhythm. The result is an evolving musical collaboration in which the box becomes a responsive dance partner. The prototype promotes embodied interaction by exploring how machine learning can enable fluid, improvised collaboration between humans and machines.

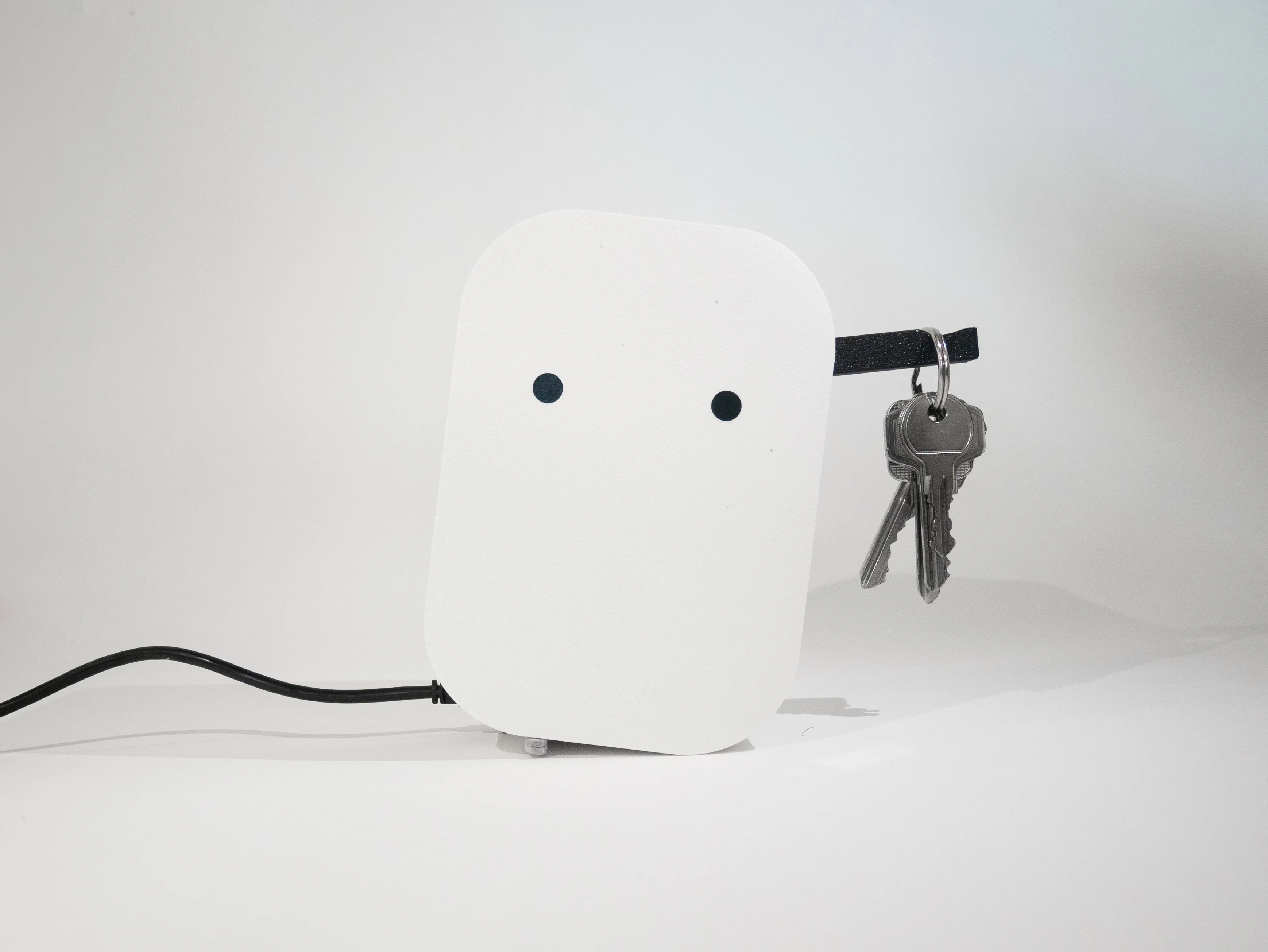
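The layering logic can be sketched as a 16-step sequencer: a base beat always plays, and each recognized movement switches on one extra track. The movement names (`sway`, `jump`, `spin`) and the step patterns are hypothetical placeholders; the project only states that three movements each add to the rhythm.

```python
from typing import List, Set

# Hypothetical 16-step patterns (one bar of sixteenth notes); 1 means "play a hit".
BASE_BEAT = [1, 0, 0, 0] * 4               # steady kick on every beat
LAYERS = {
    "sway": [0, 0, 1, 0] * 4,              # off-beat hi-hat
    "jump": [0, 0, 0, 0, 1, 0, 0, 0] * 2,  # snare on beats 2 and 4
    "spin": [1, 0, 1, 1] * 4,              # busy percussion pattern
}


class DanceSequencer:
    """Base beat plus one extra track per recognized dance movement."""

    def __init__(self) -> None:
        self.active: Set[str] = set()

    def on_move(self, label: str) -> None:
        # Each recognized movement switches on its layer; unknown labels are ignored
        if label in LAYERS:
            self.active.add(label)

    def hits_at(self, step: int) -> List[str]:
        """Names of the tracks that sound on a given step; steps wrap every bar."""
        i = step % 16
        hits = ["base"] if BASE_BEAT[i] else []
        hits += [name for name in sorted(self.active) if LAYERS[name][i]]
        return hits
```

In this sketch a layer stays on once added; a variant could drop layers after a few bars without the matching move, so the rhythm thins out when the dancer stops.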

Security Night Guard

Arthur Tomasi, Felix Schönherr

Security Night Guard investigates care through ritualized interaction with technology. Each evening, you hand over your keys to this vigilant companion, which watches over them through the night. In the morning, the “tired” guard releases the keys, creating a gentle wake-up call that signals the changing of the guard from machine to human. The prototype demonstrates a relationship with technology based on trust, responsibility, and mutual dependence rather than on mere utility.
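The nightly ritual maps naturally onto a small state machine. This sketch is hypothetical: it assumes some sensor reports when keys are deposited and taken, and that the guard hands them back from a fixed morning time (07:00 here) until noon, so keys deposited late in the evening are not released right away. The states, method names, and times are illustrative, not taken from the project.

```python
from datetime import time
from enum import Enum, auto


class GuardState(Enum):
    OFF_DUTY = auto()   # no keys deposited; the human is on guard
    ON_DUTY = auto()    # keys deposited; the machine watches over them
    RELEASING = auto()  # morning: the "tired" guard hands the keys back


class NightGuard:
    def __init__(self, release_at: time = time(7, 0)) -> None:
        self.release_at = release_at  # assumed morning hand-over time
        self.state = GuardState.OFF_DUTY

    def keys_deposited(self) -> None:
        if self.state is GuardState.OFF_DUTY:
            self.state = GuardState.ON_DUTY

    def tick(self, now: time) -> None:
        # Start the hand-over only within the assumed morning window [release_at, noon)
        if self.state is GuardState.ON_DUTY and self.release_at <= now < time(12, 0):
            self.state = GuardState.RELEASING

    def keys_taken(self) -> None:
        # Keys can only be taken back once the guard has released them
        if self.state is GuardState.RELEASING:
            self.state = GuardState.OFF_DUTY
```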

The workshop illustrated the potential of “care” as a lens for designing alternative human-machine interactions. By combining conceptual exploration with technical implementation, participants were able to turn abstract concepts into functional, experiential prototypes. These prototypes point toward technological futures in which systems take part in networks of care rather than merely serving human needs. They suggest a shift from instrumental relationships with technology toward more nuanced, emotionally engaged human-machine collaborations, and they invite reflection on how we design and live with technology.

Across the prototypes, several recurring patterns suggest alternative directions for understanding human-machine relationships. These patterns merit further exploration in future courses and research.

Reciprocal Agency emerges as a central theme. Each prototype establishes a bidirectional relationship in which both human and machine contribute meaningfully to the interactive experience. Rather than responding to simple commands, these systems engage in an ongoing, evolving relational dynamic.

Temporal Accumulation appears in several prototypes: interactions with the objects gain significance through sustained engagement. This suggests that meaningful interactions are more likely to emerge from long-term engagement than from isolated transactions or one-off uses.

Environmental Integration characterizes most of the prototypes, which favor subtle, context-sensitive responses over explicit interface interactions. Care-oriented technologies may therefore benefit from blending into their surroundings rather than demanding focused attention.

Ritual Embedding describes how several prototypes fit into everyday routines and practices. It suggests that care interactions may grow out of habitual rather than exceptional moments and become woven into daily life.

Workshop Methods and Schedule

The workshop combined conceptual exploration and hands-on experimentation in a three-day format. On the first day, students were asked to bring an object of personal significance. Discussing these objects established a tangible foundation for understanding care relationships beyond human contexts. For further conceptual engagement, we used custom ideation cards that generated prompts in the format “Caring for [an object] to care for [a purpose].” Combinations such as “caring for a plant to care for your mental health” deliberately created a provocative yet inspiring starting point for short warm-up concept sprints. Students then engaged with foundational AI concepts through hands-on experimentation in Edge Impulse, training custom machine learning models to recognize gestures and movements. A second card-based tool paired sensors with actuators, supporting systematic exploration of input-output modalities while keeping the care-based concepts in view.
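Both card decks work as simple combinatorial generators. The following hypothetical sketch draws a care prompt and a sensor-actuator pairing. Apart from “a plant,” “your mental health,” and the microphone and accelerometer used in the workshop, the card contents are invented placeholders, and the seeded random generator is an assumption that makes a session's draws repeatable.

```python
import random
from typing import Tuple

# Illustrative card decks. Only "a plant", "your mental health", "microphone", and
# "accelerometer" come from the workshop description; the rest are placeholders.
OBJECTS = ["a plant", "a lamp", "a bicycle", "a kettle"]
PURPOSES = ["your mental health", "a friendship", "your sleep", "your neighborhood"]
SENSORS = ["microphone", "accelerometer", "light sensor"]
ACTUATORS = ["LED", "speaker", "servo motor", "screen"]


def draw_prompt(rng: random.Random) -> str:
    """Draw one object card and one purpose card and combine them into a prompt."""
    return f"Caring for {rng.choice(OBJECTS)} to care for {rng.choice(PURPOSES)}"


def draw_pairing(rng: random.Random) -> Tuple[str, str]:
    """Draw one sensor card and one actuator card."""
    return rng.choice(SENSORS), rng.choice(ACTUATORS)


if __name__ == "__main__":
    rng = random.Random(7)  # fixed seed (an assumption) so a session's draws can be repeated
    for _ in range(3):
        sensor, actuator = draw_pairing(rng)
        print(f"{draw_prompt(rng)} | {sensor} -> {actuator}")
```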

On the second day, students developed their final concepts around care interactions. Implementation began in the online machine learning service Edge Impulse, where students trained custom models to classify specific movements, gestures, or sensor patterns from microphone or accelerometer data. The models were deployed on an Arduino Nano 33 BLE Sense microcontroller, which sent its classifications to the computer as emulated keyboard input. To support the technical experiments, students were given boilerplate code for input and output modalities. On the output side, p5.js templates allowed them either to create custom interactions and visuals or to use a large language model (LLM) or a text-to-speech model to generate text or speech.
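Because the board reports each classification as an emulated keystroke, the receiving sketch only has to map characters back to labels. In the workshop this happened in p5.js (for example in its `keyPressed()` callback, which exposes the typed character through the `key` variable); the Python sketch here shows the same logic in isolation. The key-to-label mapping and the one-second rate limit are assumptions: a continuously running classifier may type the same character many times while a gesture is held, so each label is acted on at most once per `hold_seconds`.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical mapping from the character the board types to a class label.
KEY_TO_LABEL = {"1": "wave", "2": "tap", "3": "shake"}


@dataclass
class KeyDecoder:
    """Turns emulated keystrokes back into classifier labels, rate-limited per label."""
    hold_seconds: float = 1.0  # assumed: act on each label at most once per second
    _last_fired: Dict[str, float] = field(default_factory=dict, init=False)

    def on_key(self, key: str, t: float) -> Optional[str]:
        """Return the label to act on, or None for unknown keys and rapid repeats."""
        label = KEY_TO_LABEL.get(key)
        if label is None:
            return None
        last = self._last_fired.get(label)
        if last is not None and t - last < self.hold_seconds:
            return None  # same gesture still being reported; ignore the repeat
        self._last_fired[label] = t
        return label
```

Because the board acts as a keyboard, its keystrokes go to whichever window has focus, so the receiving sketch needs to stay in the foreground while the prototype runs.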

On the third day, the prototypes (presented above) were finalized and prepared for an exhibition.