White-boxing AI text comprehension to augment human text comprehension

PhD Progress Update:

Based on LLMs’ internal metrics, we can calculate and visualize the reciprocal contextualizing and contextual integration of words, potentially increasing text comprehensibility.

Introduction:

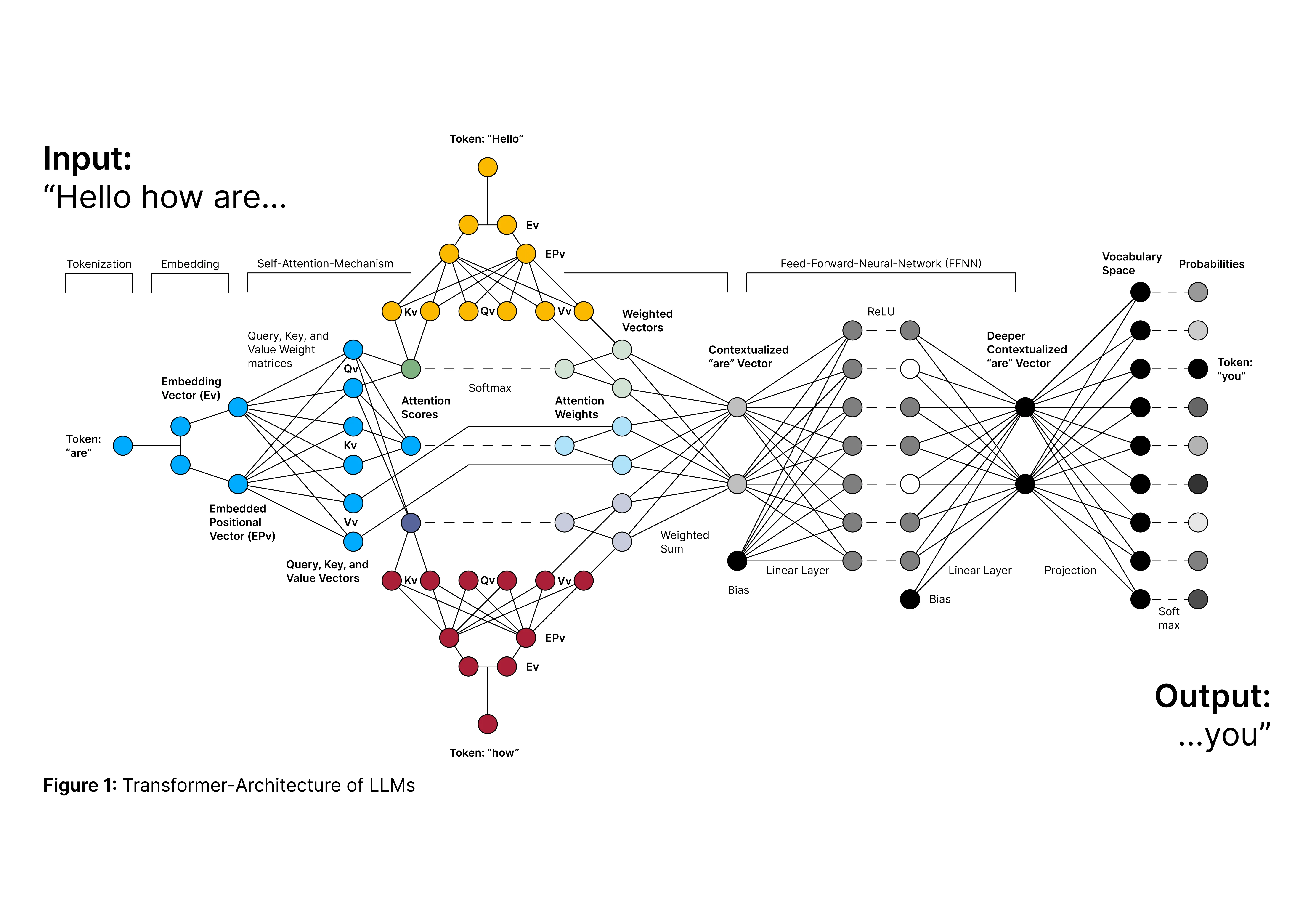

To predict the word “you” after the text “Hello how are”, transformer-based Large Language Models (LLMs) process the text in several steps (Figure 1).

- The text gets split into words or sub-words, and the meaning of each is represented by its position in a vector space (Tokenization and Embedding)

- The meaning of the previous words gets added to the vector representation of the word “are” to contextualize it (Self-Attention Mechanism)

- The vector representation of the word “are” is further transformed by a feed-forward neural network (FFNN)

- The word whose vector representation is most aligned with the contextualized “are” vector representation is chosen (in this case, “you”) [1]
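The four steps can be sketched in a few lines of Python. This is a toy illustration only: the vocabulary, the random embeddings, and the helper names (`attend_last`, `feed_forward`, `predict_next`) are hypothetical, there is a single attention head and layer, and the learned query/key/value projections of a real transformer are left out. Because nothing is trained, the predicted word is arbitrary; the sketch only shows how the data flows.

```python
import math
import random

random.seed(0)
VOCAB = ["hello", "how", "are", "you"]
DIM = 4
# Hypothetical random embeddings; a real model learns these during training.
EMBED = {w: [random.uniform(-1, 1) for _ in range(DIM)] for w in VOCAB}


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend_last(vectors):
    """Contextualize the last token with an attention-weighted sum of all tokens."""
    query = vectors[-1]
    scores = [dot(query, key) / math.sqrt(DIM) for key in vectors]
    weights = softmax(scores)  # attention scores of the last token
    context = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(DIM)]
    # Residual connection: the context is added to the token's own vector.
    return weights, [q + c for q, c in zip(query, context)]


def feed_forward(vec):
    # Stand-in for the FFNN: a ReLU with a residual connection, no learned weights.
    return [x + max(0.0, x) for x in vec]


def predict_next(text):
    tokens = text.lower().split()          # step 1: tokenization
    vectors = [EMBED[t] for t in tokens]   # step 1: embedding
    weights, contextualized = attend_last(vectors)  # step 2: self-attention
    hidden = feed_forward(contextualized)  # step 3: FFNN
    # Step 4: choose the vocabulary word most aligned with the final vector.
    logits = {w: dot(hidden, EMBED[w]) for w in VOCAB}
    return max(logits, key=logits.get), weights


next_word, weights = predict_next("Hello how are")
print(next_word, [round(w, 3) for w in weights])
```

The `weights` list is the part that matters for the rest of this update: it holds one attention score per input word for the word “are”.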

Even though these LLMs are called “Black Boxes”, we can retrieve and interpret the Attention Scores of words to calculate and visualize the reciprocal contextualizing and the contextual integration of words.

Methods:

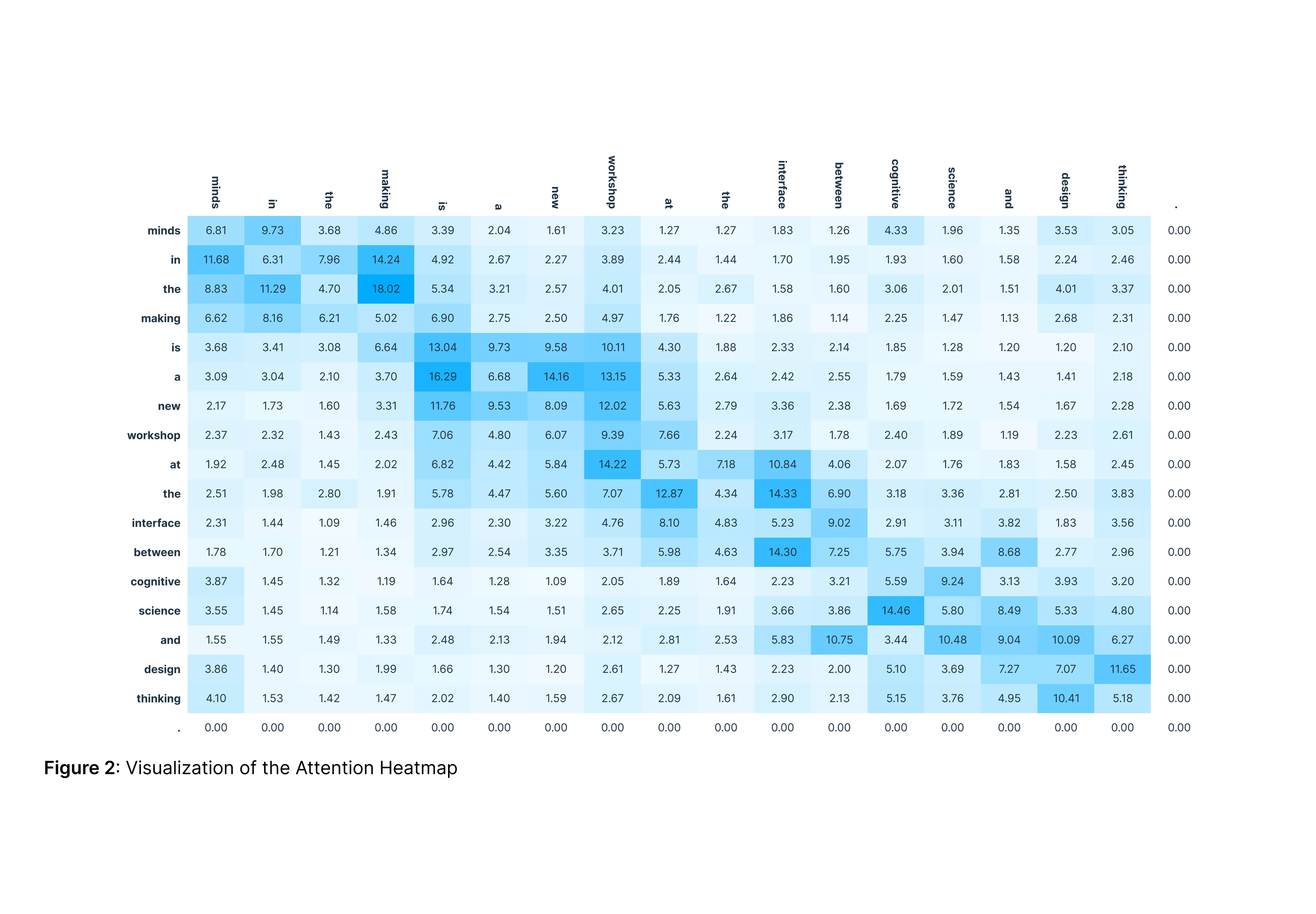

The Attention Scores of the words in a text determine how important each word is contextually for the meaning of a specific word in the text, that is, how much of its meaning is added to that specific word to contextualize it.

The Attention Heatmap (Figure 2) shows how much the row word attends to the column word, that is, how much of the column word’s meaning is added to the row word’s meaning to contextualize it.

- Summing the attention scores in a word’s row gives its total received meaning

- Summing the attention scores in a word’s column gives its total provided meaning

This enables us to calculate the normalized sum of the total provided and total received meaning, which represents the contextual integration of a word.
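The row sums, column sums, and their normalized combination can be sketched as follows. The matrix values are hypothetical and chosen only for illustration, not taken from a model, and the function name `contextual_integration` is made up for this sketch. The normalization is not specified further here, so dividing by the largest combined value is an assumption.

```python
words = ["Hello", "how", "are"]

# attention[i][j]: how much of word j's meaning is added to word i
# (the row word receives, the column word provides). Hypothetical values.
attention = [
    [0.0, 0.0, 0.0],
    [0.6, 0.0, 0.0],
    [0.3, 0.5, 0.0],
]


def contextual_integration(attention):
    n = len(attention)
    # Row sums: total meaning each word receives from the others.
    received = [sum(row) for row in attention]
    # Column sums: total meaning each word provides to the others.
    provided = [sum(attention[i][j] for i in range(n)) for j in range(n)]
    combined = [r + p for r, p in zip(received, provided)]
    # Assumed normalization: scale so the most integrated word gets 1.0.
    peak = max(combined)
    integration = [c / peak if peak > 0 else 0.0 for c in combined]
    return received, provided, integration


received, provided, integration = contextual_integration(attention)
for word, score in zip(words, integration):
    print(f"{word}: {score:.2f}")
```

In this toy matrix, “how” ends up with the highest score because it both receives meaning from “Hello” and provides meaning to “are”.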

Visualization:

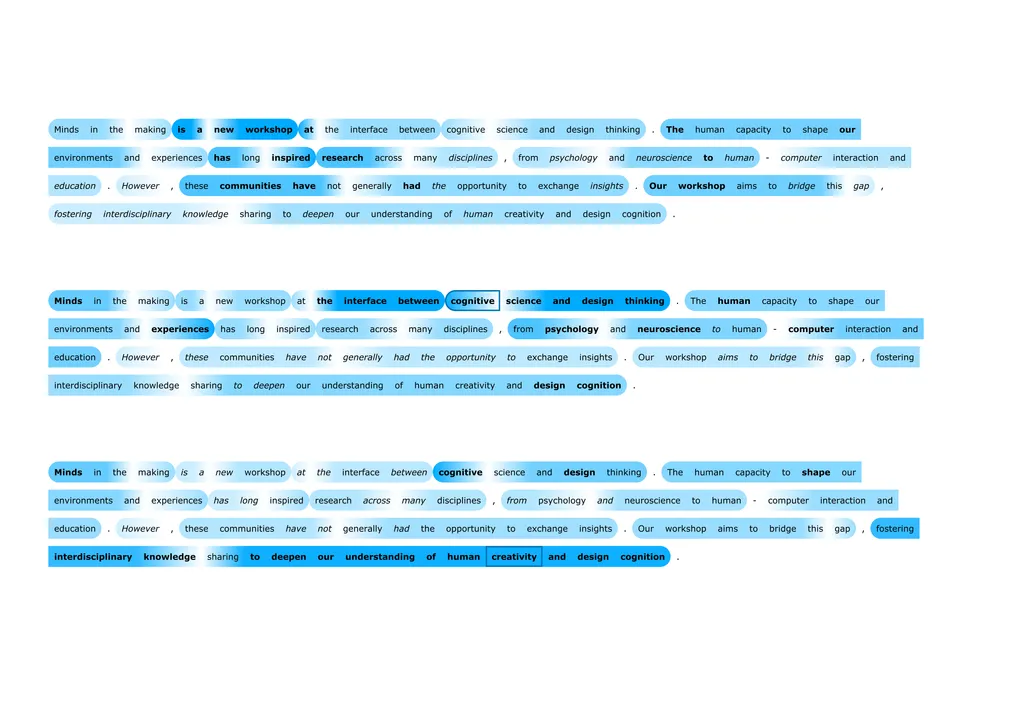

By visualizing the contextual integration of words through font (high = bold, middle = regular, and low = italic) and color (from high = blue to low = white), we enable the reader to directly see the core of the text, as well as words that might need further contextualization if the reader does not know them.
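One possible way to turn a contextual integration score into this styling is sketched below. Several details are assumptions rather than part of the described method: the score is taken to lie in [0, 1], the font bands are split at one third and two thirds, the color is applied as a background, and the output is an HTML `span`. The function names are hypothetical.

```python
import html


def font_for(score):
    # Assumed thresholds: the top third is bold, the bottom third is italic.
    if score >= 2 / 3:
        return "bold"
    if score >= 1 / 3:
        return "regular"
    return "italic"


def color_for(score):
    # Linear blend from white (score 0) to blue (score 1), as an RGB triple.
    score = min(1.0, max(0.0, score))
    level = round(255 * (1 - score))
    return (level, level, 255)


def render_word(word, score):
    font = font_for(score)
    weight = "bold" if font == "bold" else "normal"
    style = "italic" if font == "italic" else "normal"
    r, g, b = color_for(score)
    return (
        f'<span style="font-weight:{weight};font-style:{style};'
        f'background-color:rgb({r},{g},{b})">{html.escape(word)}</span>'
    )


print(render_word("how", 1.0))
print(render_word("are", 0.2))
```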

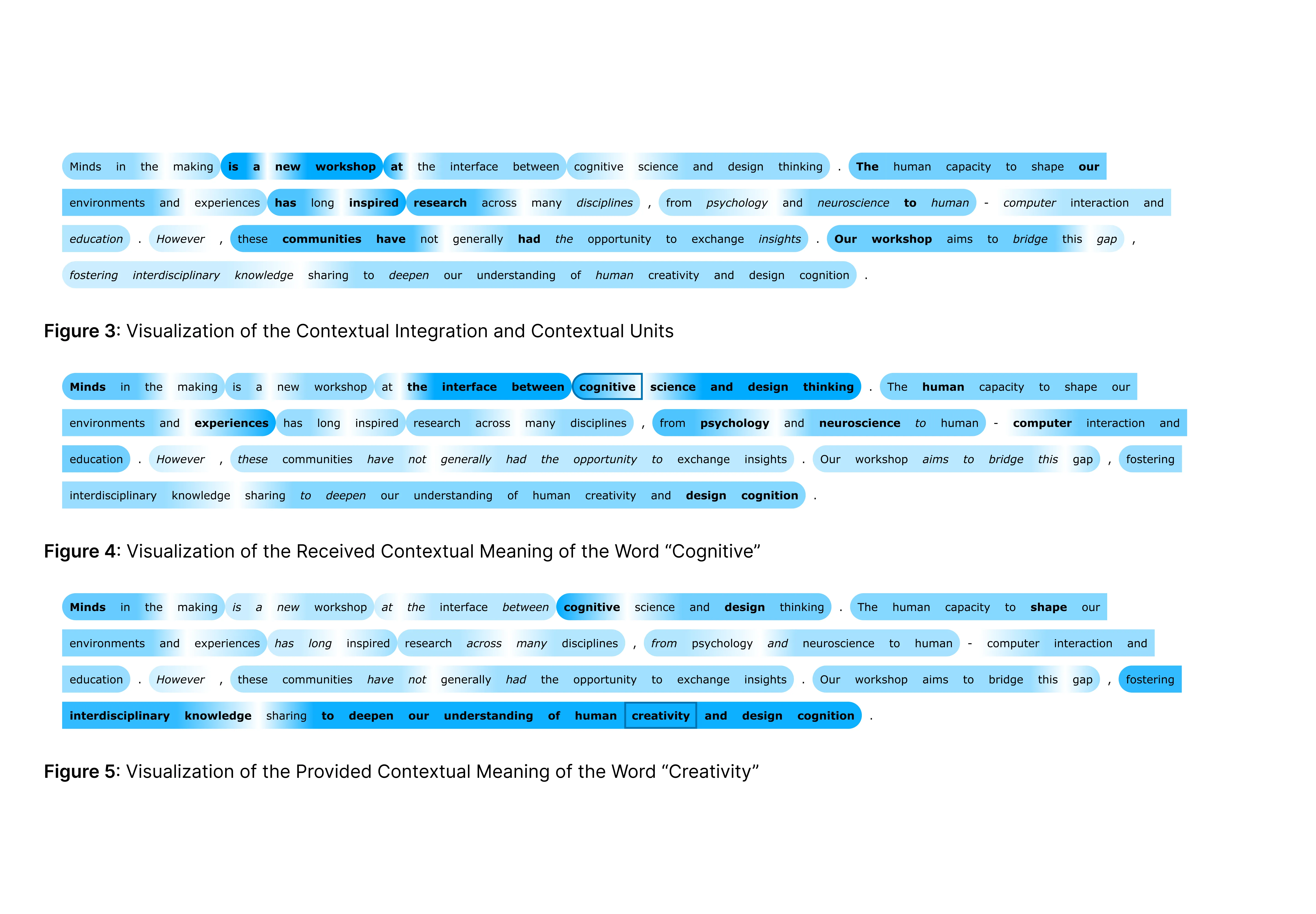

By visualizing whether a word receives most of its contextual meaning from the left or right (white to blue gradient), contextual units emerge that could help the reader anticipate and understand the context (Figure 3).

- To see where exactly a word receives its contextual meaning from, the reader can select it and understand its contextual integration (Figure 4)

- In the same way, the reader can see where exactly a word provides its meaning to (Figure 5), which makes the reciprocal contextualizing visible
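The left/right direction and the two per-word views can be sketched from the same kind of attention matrix. The matrix below is hypothetical and includes scores to the right of each word, which presupposes attention scores that cover both directions. The balance formula and the function names are assumptions made for this sketch.

```python
words = ["Hello", "how", "are", "you"]

# attention[i][j]: how much of word j's meaning is added to word i.
# Hypothetical values covering both left and right context.
attention = [
    [0.0, 0.2, 0.1, 0.1],
    [0.3, 0.0, 0.4, 0.1],
    [0.1, 0.5, 0.0, 0.2],
    [0.1, 0.1, 0.6, 0.0],
]


def direction_balance(attention, i):
    """Return -1.0 if word i receives only from the left, +1.0 if only from the right."""
    left = sum(attention[i][:i])
    right = sum(attention[i][i + 1:])
    total = left + right
    return (right - left) / total if total > 0 else 0.0


def received_from(attention, i):
    # Row i: how much meaning word i receives from each word.
    return list(attention[i])


def provided_to(attention, j):
    # Column j: how much meaning word j provides to each word.
    return [row[j] for row in attention]


for i, word in enumerate(words):
    print(word, round(direction_balance(attention, i), 2))

selected = words.index("are")
print(dict(zip(words, received_from(attention, selected))))
print(dict(zip(words, provided_to(attention, selected))))
```

The balance value could drive the white-to-blue gradient, while the row and column lookups correspond to the two selection views.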

Outlook:

The visualization of the reciprocal contextualizing and contextual integration of words in a text could give readers a visualized contextual understanding of the text that was hidden before in our very own “Black Box”, the human brain. This work explores how “white-boxing” AI can not only support its explainability and potential to simulate human text comprehension, but also be used to augment and empower humans.

The corresponding poster was presented at the Minds in the Making Workshop at the CogSci Conference 2025.