Predicting Comprehensibility in Scientific Text Based on Word Facilitation

PhD Progress Update:

Towards making every scientific text comprehensible for anyone.

Motivation:

Despite the growth of open and accessible science [Klebel et al., 2025], levels of scientific literacy are declining [OECD, 2023]. Closing this gap requires writing that communicates science in ways that remain comprehensible across disciplines and to broader audiences. A key step toward this goal is the ability to predict scientific text comprehension.

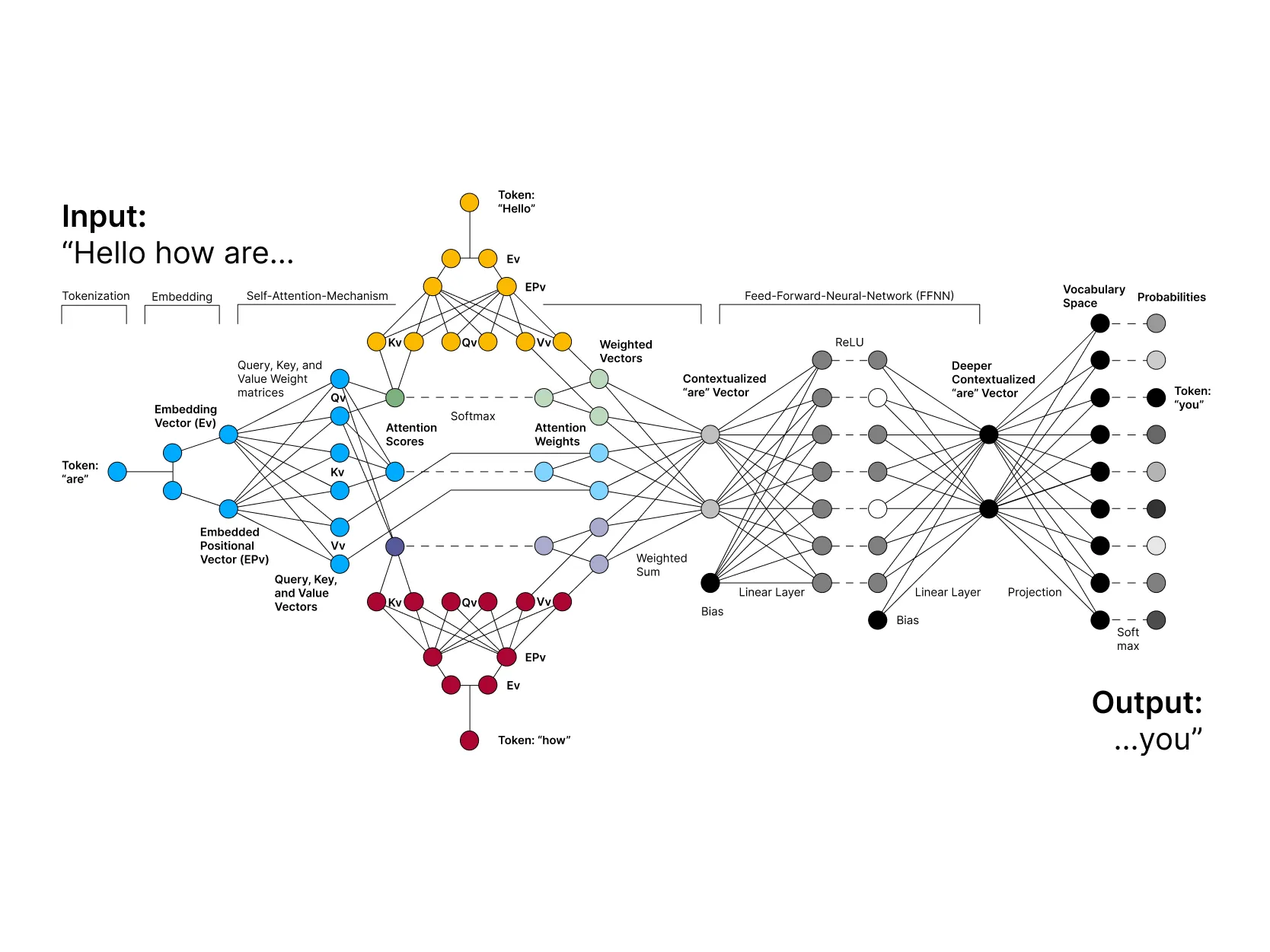

In this work, we derive a novel metric for predicting scientific text comprehension from the attention mechanism of transformer architectures (Figure 1). In a transformer, attention scores determine how strongly the representation of each word draws on every other word in context [Vaswani et al., 2017]. We interpret these scores as how strongly each word facilitates the processing of the other words. Here, we evaluate this attention-based word facilitation as a metric of text comprehensibility.

- Figure 1: Abstract transformer architecture of a large language model.
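The idea above can be made concrete with a minimal sketch. It assumes a single attention head and uses random placeholder query and key vectors rather than real model weights. Scaled dot-product attention [Vaswani et al., 2017] produces a matrix whose row i is the distribution of word i's attention over the sentence. Summing each column over the other words gives one simple, hypothetical score for how much word j is drawn on by the rest of the sentence. The function names `scaled_dot_product_attention` and `attention_received` are illustrative only and are not the metric's actual implementation.

```python
import numpy as np


def scaled_dot_product_attention(q: np.ndarray, k: np.ndarray, causal: bool = True) -> np.ndarray:
    """Return the attention-weight matrix softmax(Q K^T / sqrt(d_k)).

    Row i is the distribution of word i's attention over all words j.
    With causal=True, word i may only attend to words j <= i (GPT-style).
    """
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)
    if causal:
        # Mask future positions so they receive zero weight after the softmax.
        mask = np.triu(np.ones_like(scores, dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    # Numerically stable softmax over each row.
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)


def attention_received(attn: np.ndarray) -> np.ndarray:
    """Hypothetical facilitation score: total attention word j receives from other words."""
    off_diagonal = attn * (1.0 - np.eye(attn.shape[0]))  # ignore self-attention
    return off_diagonal.sum(axis=0)


rng = np.random.default_rng(0)
n_words, d_k = 5, 8
q = rng.normal(size=(n_words, d_k))  # placeholder query vectors (not real model weights)
k = rng.normal(size=(n_words, d_k))  # placeholder key vectors (not real model weights)

attn = scaled_dot_product_attention(q, k)
print(np.round(attention_received(attn), 3))
```

With causal masking, no later word exists to attend to the final word, so it always scores zero. This is one position-dependent effect that normalization or directional weighting might need to address.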

Methods:

We compared the explanatory power of word facilitation (normalized and computed using inverse entropy and directional weighting) with that of traditional linguistic metrics of text comprehensibility: word length, frequency (SUBTLEX-US corpus [Brysbaert et al., 2012]), surprisal (negative log probability from GPT-2), and predictability (human ratings on a 1–5 scale). We used the dataset from [de Varda et al., 2024], which contains reading times and EEG-derived neural activations reflecting text processing, recorded for each word in 205 English sentences.
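The Methods do not specify how inverse entropy and directional weighting enter the metric. The following NumPy sketch is therefore only one hypothetical reading, and every weighting choice in it is an assumption:

- Each attending word's contribution is scaled by 1 / (1 + H), where H is the entropy of its attention distribution, so focused attention counts more than diffuse attention.
- Attention from a later word to an earlier one ("forward") and from an earlier word to a later one ("backward") receive separate, assumed weights `forward_weight` and `backward_weight`.
- Scores are normalized to sum to 1 within the sentence.

The function name `weighted_facilitation` is illustrative.

```python
import numpy as np


def row_entropy(attn: np.ndarray) -> np.ndarray:
    """Shannon entropy (in nats) of each attending word's attention distribution."""
    p = np.clip(attn, 1e-12, 1.0)  # avoid log(0); zero weights still contribute 0
    return -(attn * np.log(p)).sum(axis=1)


def weighted_facilitation(attn: np.ndarray, forward_weight: float = 1.0,
                          backward_weight: float = 0.5) -> np.ndarray:
    """Hypothetical normalized facilitation score for each word j.

    attn[i, j] is how strongly word i attends to word j (rows sum to 1).
    - Inverse entropy (assumed form): rows with focused, low-entropy attention
      get more weight; 1 / (1 + H) avoids dividing by zero when H = 0.
    - Directional weighting (assumed): i > j is "forward", i < j is "backward".
    - Normalization (assumed): scores are divided by their sum.
    """
    n = attn.shape[0]
    row_weight = 1.0 / (1.0 + row_entropy(attn))  # one weight per attending word i
    i_idx, j_idx = np.indices((n, n))
    # Weight 0 on the diagonal drops self-attention.
    direction = np.where(i_idx > j_idx, forward_weight,
                         np.where(i_idx < j_idx, backward_weight, 0.0))
    contrib = attn * row_weight[:, None] * direction
    scores = contrib.sum(axis=0)
    return scores / scores.sum()


# Toy bidirectional attention over a 3-word sentence (each row sums to 1).
attn = np.array([
    [0.2, 0.6, 0.2],
    [0.7, 0.1, 0.2],
    [0.5, 0.4, 0.1],
])
print(np.round(weighted_facilitation(attn), 3))
```

Backward attention only exists in bidirectional models. With a causal model such as GPT-2, that term is always zero.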

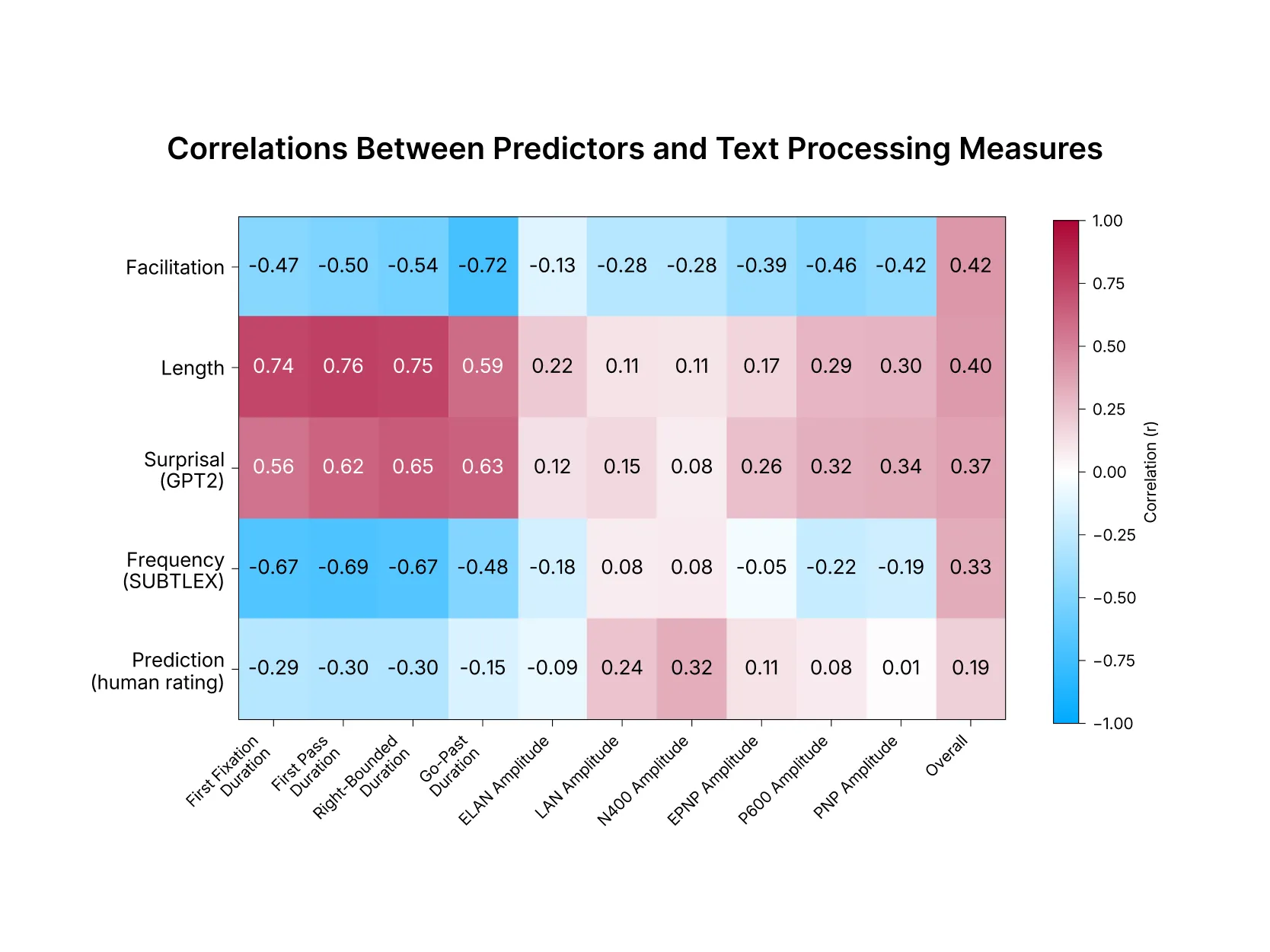

- Figure 2: Pearson correlations (r) between predictors and both reading times and neural activations reflecting text processing. Word facilitation shows the strongest overall correlation (mean |r| across measures).
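The summary statistic in Figure 2 works as follows. For each predictor, a Pearson r is computed against each processing measure, and the predictor's overall score is the mean of |r| across those measures. The minimal NumPy sketch below uses hypothetical, randomly generated values in place of the de Varda et al. [2024] data. The measure names and effect sizes are placeholders.

```python
import numpy as np


def pearson_r(x: np.ndarray, y: np.ndarray) -> float:
    """Pearson correlation coefficient between two equal-length vectors."""
    return float(np.corrcoef(x, y)[0, 1])


def mean_abs_r(predictor: np.ndarray,
               measures: dict[str, np.ndarray]) -> tuple[dict[str, float], float]:
    """Correlate one predictor with every measure; return per-measure r and mean |r|."""
    rs = {name: pearson_r(predictor, values) for name, values in measures.items()}
    return rs, float(np.mean([abs(r) for r in rs.values()]))


rng = np.random.default_rng(1)
n_words = 200
# Placeholder word-level data; a real analysis would use the de Varda et al. (2024) dataset.
facilitation = rng.normal(size=n_words)
measures = {
    "first_fixation": 0.3 * facilitation + rng.normal(size=n_words),
    "go_past": -0.4 * facilitation + rng.normal(size=n_words),
    "n400": 0.2 * facilitation + rng.normal(size=n_words),
}

rs, overall = mean_abs_r(facilitation, measures)
for name, r in rs.items():
    print(f"{name:>15}: r = {r:+.3f}")
print(f"mean |r| = {overall:.3f}")
```

Taking |r| puts predictors on one scale even when their correlations differ in sign across measures, for example positive with one measure and negative with another.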

Results:

Word facilitation demonstrates the strongest overall correlation with text processing measures, outperforming traditional predictors (length, frequency, surprisal, and predictability) across both reading times and neural activations (Figure 2).

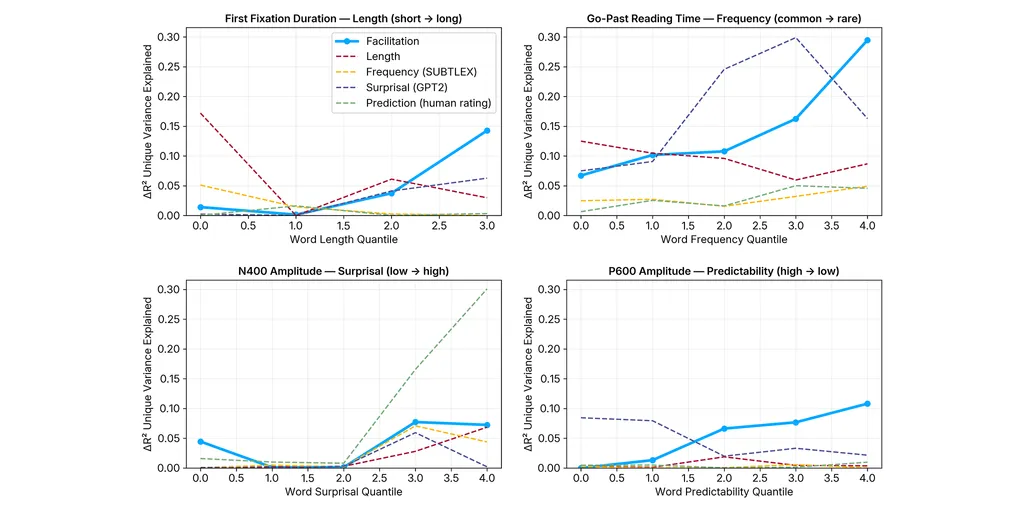

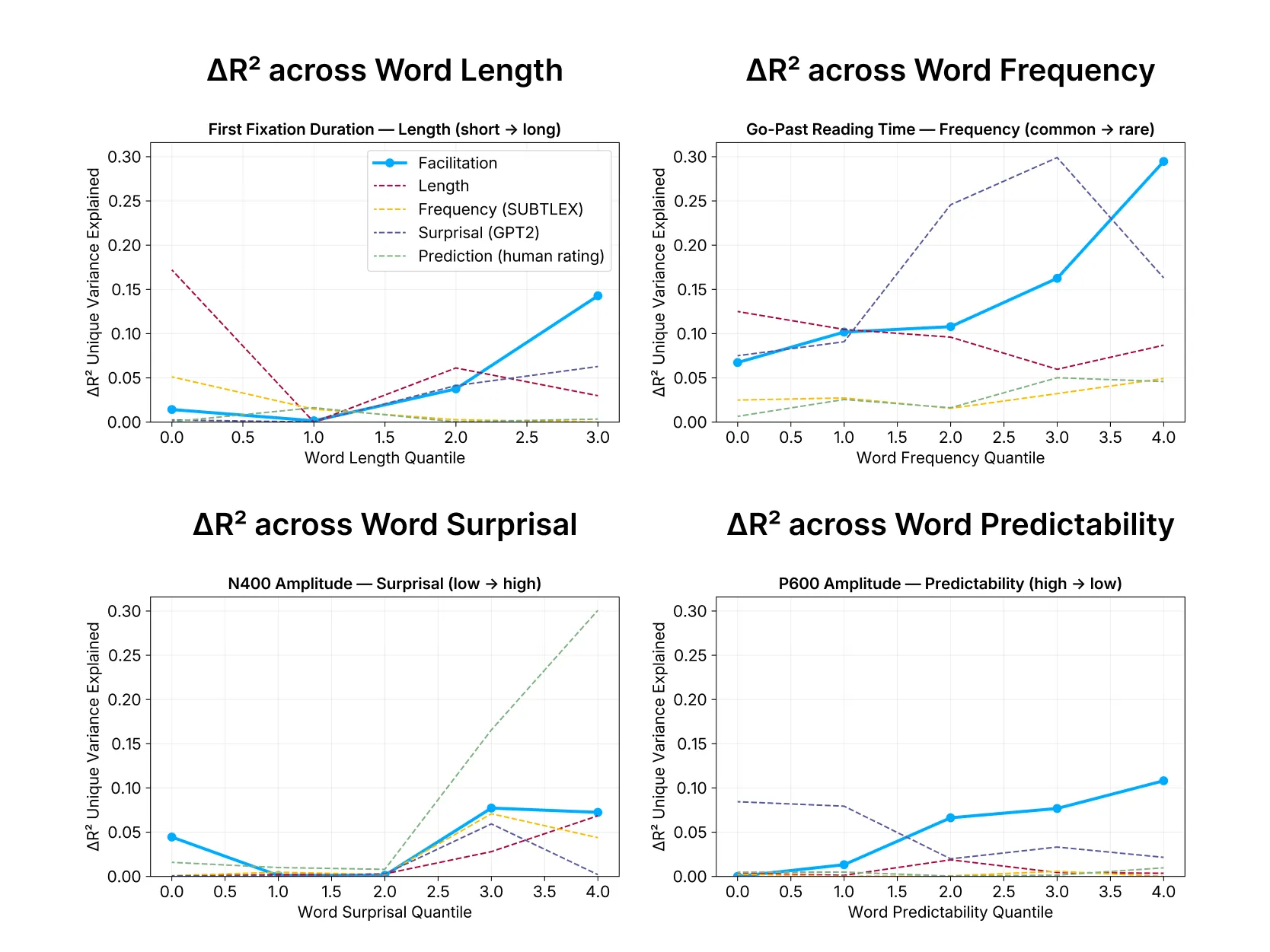

Word facilitation explains unique variance especially for long, less frequent, and less predictable words. For first fixation durations, it even explained the most unique variance in the quantiles with the longest words. This is notable because first fixation duration reflects initial lexical access [Demberg and Keller, 2008] and is normally dominated by length and frequency (Figure 3).

- Figure 3: Unique variance (∆R²) explained by each predictor for reading times (first fixation duration and go-past reading time) and for neural activations reflecting integration difficulty (N400 and P600 amplitude), shown across word property quantiles.
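In this sketch, unique variance is read as the drop in R² when one predictor is removed from a linear regression containing all predictors. This drop is computed separately within each quantile bin of a word property such as length. The sketch assumes ordinary least squares and quantile bin edges, and it uses synthetic data in which facilitation matters more for longer words. The exact regression specification behind Figure 3 is not given here, and the helper names are hypothetical.

```python
import numpy as np


def r_squared(X: np.ndarray, y: np.ndarray) -> float:
    """R² of an ordinary least-squares fit of y on X (an intercept is added)."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    residuals = y - X1 @ beta
    return 1.0 - residuals.var() / y.var()


def unique_variance(X: np.ndarray, y: np.ndarray, col: int) -> float:
    """ΔR²: full-model R² minus R² of the model without predictor `col`."""
    reduced = np.delete(X, col, axis=1)
    return r_squared(X, y) - r_squared(reduced, y)


def delta_r2_by_quantile(X: np.ndarray, y: np.ndarray, col: int,
                         property_values: np.ndarray, n_bins: int = 4) -> list[float]:
    """ΔR² for predictor `col` within each quantile bin of a word property."""
    edges = np.quantile(property_values, np.linspace(0, 1, n_bins + 1))
    # Map each word to a bin index in 0..n_bins-1 (the maximum goes into the last bin).
    bins = np.clip(np.searchsorted(edges, property_values, side="right") - 1, 0, n_bins - 1)
    return [unique_variance(X[bins == b], y[bins == b], col) for b in range(n_bins)]


rng = np.random.default_rng(2)
n = 2000
length = rng.integers(2, 14, size=n).astype(float)  # synthetic word length in letters
frequency = rng.normal(size=n)                      # synthetic standardized frequency
facilitation = rng.normal(size=n)                   # synthetic facilitation scores
# Synthetic reading times in which facilitation matters more for longer words.
rt = 200 + 8 * length - 10 * frequency - 3 * length * facilitation + rng.normal(0, 20, size=n)

X = np.column_stack([length, frequency, facilitation])
print(np.round(delta_r2_by_quantile(X, rt, col=2, property_values=length), 3))
```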

Conclusion:

We introduced an attention-based metric of text comprehensibility, based on how strongly each word facilitates the processing of other words in context within a transformer model.

Word facilitation complements the traditional linguistic metrics used to predict text comprehensibility by explaining late reading times and neural activations that reflect the integration of a word into its broader sentence context. It explained unique variance especially for words that existing linguistic metrics misclassify as difficult. This highlights its sensitivity to context and its suitability for scientific texts, which are rich in long, infrequent, and less predictable yet contextually comprehensible words.

References

- M. Brysbaert, B. New, and E. Keuleers. Adding part-of-speech information to the SUBTLEX-US word frequencies. Behavior Research Methods, 44(4):991–997, 2012.

- A. G. de Varda, M. Marelli, and S. Amenta. Cloze probability, predictability ratings, and computational estimates for 205 English sentences, aligned with existing EEG and reading time data. Behavior Research Methods, 56(5):5190–5213, 2024.

- V. Demberg and F. Keller. Data from eye-tracking corpora as evidence for theories of syntactic processing complexity. Cognition, 109(2):193–210, 2008.

- T. Klebel, V. Traag, I. Grypari, L. Stoy, and T. Ross-Hellauer. The academic impact of open science: a scoping review. Royal Society Open Science, 12(3):241248, 2025.

- OECD. PISA 2022 Results (Volume I): The State of Learning and Equity in Education. OECD Publishing, 2023. ISBN 9789264351288.

- A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin. Attention is all you need. Advances in Neural Information Processing Systems, 30, 2017.

- S. Wiegreffe and Y. Pinter. Attention is not not explanation. arXiv preprint arXiv:1908.04626, 2019.

The corresponding poster was presented at the AI in Science Summit 2025.