Building AI Intuition: Four Pillars for Teaching AI in Design Education

How do we prepare design students to work meaningfully with AI technologies? Over the past three years, we’ve developed and tested a comprehensive approach grounded in four educational pillars.

This article summarizes “Building AI Intuition – Four Educational Pillars for Teaching AI in Design at AI+D Lab” [1], published in the third volume of the KITeGG un/learn AI series. The full text offers detailed descriptions of teaching formats, tools, and insights from three years of practice. The complete publication is available at unlearn.gestaltung.ai.

The Challenge

As AI increasingly becomes part of the design landscape, a critical question emerges: how do we prepare students to engage with AI thoughtfully, critically, and creatively? The challenge goes beyond technical training. Students need to understand when to leverage AI and when to rely on their human intuition. They need to assess AI’s capabilities and limitations, develop precise language to communicate about these systems, and critically evaluate the societal, ethical, and environmental impacts of AI.

In 2023, we outlined our ambition to provide students with what we call AI Intuition—a broad understanding of AI’s working principles and constraints that enables meaningful engagement at multiple levels [2]. This article synthesizes three years of experimentation, iteration, and learning at the AI+Design Lab, presenting our approach grounded in four complementary pillars.

Four Pillars for AI Intuition

Our approach rests on four interconnected pillars, each contributing to developing AI Intuition:

1. Improving Technical Literacy

Understanding the foundational principles of AI is essential for practical engagement at the intersection of AI and design. However, technical literacy doesn’t require turning designers into machine learning engineers. Instead, we focus on developing an intuition for what AI can and cannot do, covering the basics of neural networks, the specifics of sensor-based AI, image generation models, and the architecture of large language models.

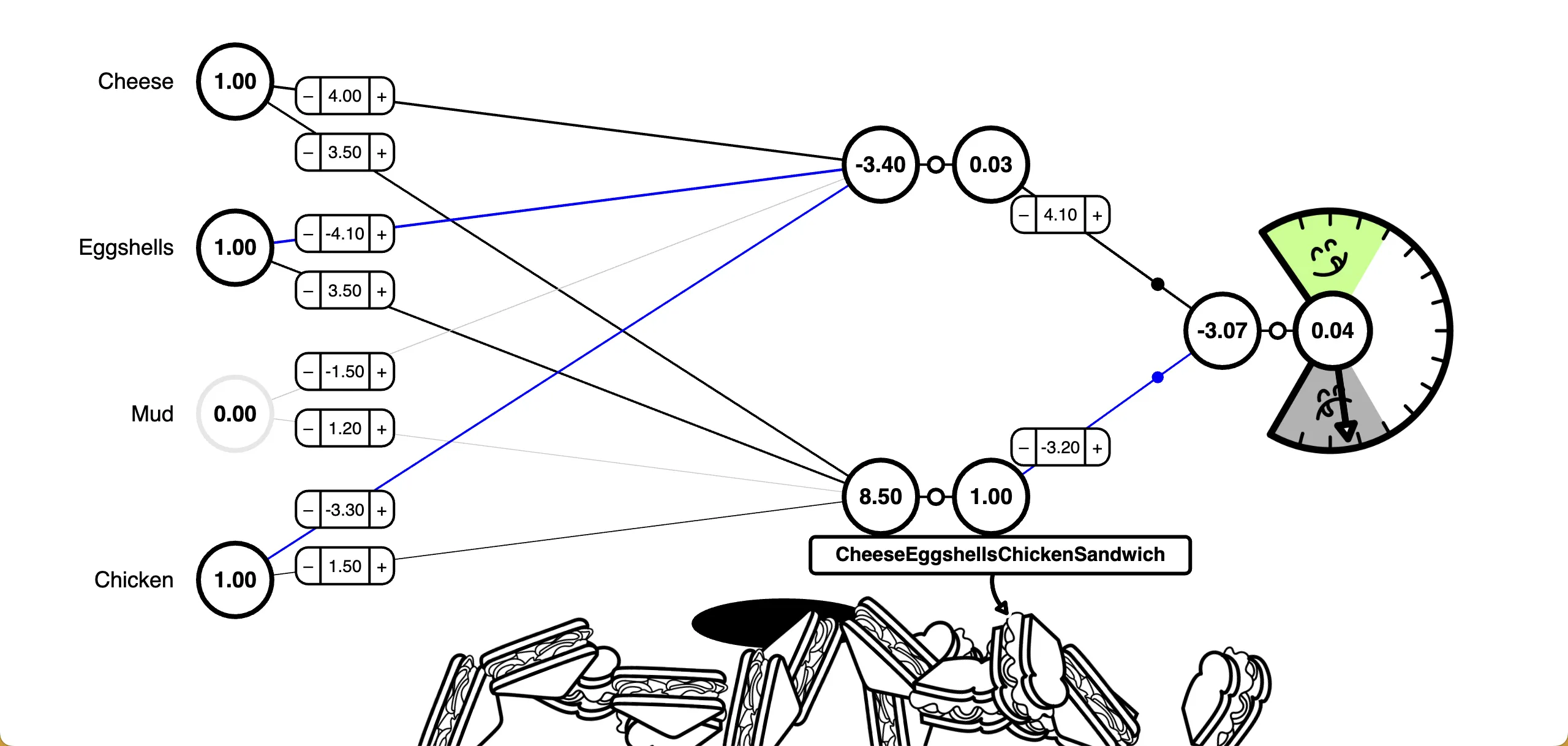

To make these foundational principles tangible, we’ve developed playful teaching tools. SandwichNet visualizes neural networks by training a sandwich classifier, while Acting out AI Systems has students physically role-play AI components to understand system functionality and limitations from the inside out.
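To give a sense of the kind of principle a sandwich classifier can make visible, here is a minimal sketch of a single artificial neuron (a perceptron) learning to tell sandwiches from non-sandwiches. It is not SandwichNet's code: the three binary features (`bread_on_top`, `filling`, `bread_on_bottom`), the toy data, and all function names are hypothetical, chosen only to show how weights shift in response to mistakes.

```python
from itertools import product


def step(x: float) -> int:
    """Threshold activation: the neuron outputs 1 only when its weighted sum is positive."""
    return 1 if x > 0 else 0


def predict(weights: list[float], bias: float, features: tuple[int, ...]) -> int:
    return step(sum(w * f for w, f in zip(weights, features)) + bias)


def train_perceptron(samples, epochs: int = 20, lr: float = 1.0):
    """Classic perceptron rule: nudge weights toward the correct answer after each mistake."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, label in samples:
            error = label - predict(weights, bias, features)
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * f for w, f in zip(weights, features)]
                bias += lr * error
        if mistakes == 0:  # every example classified correctly: stop early
            break
    return weights, bias


# Hypothetical toy data: (bread_on_top, filling, bread_on_bottom) -> 1 if "sandwich".
# In this toy definition, a sandwich needs all three ingredients.
samples = [(feat, int(all(feat))) for feat in product([0, 1], repeat=3)]
weights, bias = train_perceptron(samples)

print("weights:", weights, "bias:", bias)
print(predict(weights, bias, (1, 1, 1)))  # 1: bread, filling, bread
print(predict(weights, bias, (1, 0, 1)))  # 0: just two slices of bread
```

Printing `weights` and `bias` after training shows what the neuron has learned to rely on, which is the sort of internal state a visual teaching tool can make tangible.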

A screenshot of the SandwichNet application.

Acting out AI Systems: Students acting as human sensors and recording a data stream of a thermal camera on paper.

2. Fostering Hands-on Exploration

The complexity and non-deterministic nature of AI make it challenging to approach as a design material. Traditional creative processes rely on what Donald Schön called “reflection-in-action”: ideation that unfolds through direct engagement with materials. To enable this with AI, we prioritize hands-on engagement and a learning-through-making mindset.

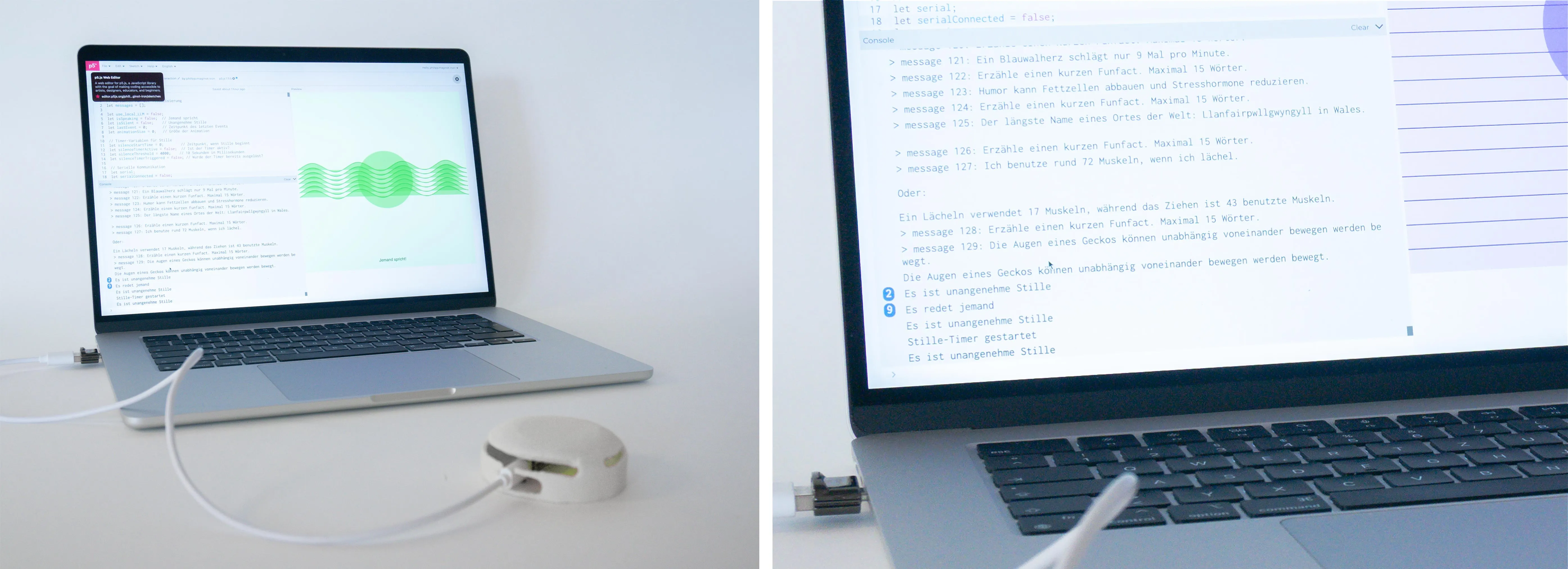

One focus area is Physical AI: machine learning on microcontrollers that interact with the world through sensors and actuators. The limited computing power of microcontrollers keeps models small, which makes their functionality easier to grasp. Using Edge Impulse and Arduino boards, students experience the full pipeline from data collection to deployment. Reusable code elements for sensing and acting allow students to quickly turn concepts into interactive prototypes.
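The pipeline from data collection to deployment can be illustrated in miniature. The following sketch assumes a hypothetical sound-level sensor and uses a nearest-centroid classifier as a deliberately simple stand-in. It does not reproduce Edge Impulse's API, its signal processing, or the models it trains, and the readings are invented for illustration.

```python
from statistics import mean


def windows(stream, size):
    """Split a sensor stream into non-overlapping windows of `size` samples (leftovers are dropped)."""
    return [stream[i:i + size] for i in range(0, len(stream) - size + 1, size)]


def features(window):
    """Two hand-picked features: average level and peak-to-peak range."""
    return (mean(window), max(window) - min(window))


def train_centroids(labelled_windows):
    """'Training' here means averaging the feature vectors of each class into one centroid."""
    by_label = {}
    for label, window in labelled_windows:
        by_label.setdefault(label, []).append(features(window))
    return {label: tuple(mean(dim) for dim in zip(*feats)) for label, feats in by_label.items()}


def classify(centroids, window):
    """Pick the class whose centroid is closest (squared Euclidean distance)."""
    f = features(window)
    return min(centroids, key=lambda label: sum((a - b) ** 2 for a, b in zip(f, centroids[label])))


# 1. Data collection: hypothetical readings from a sound-level sensor.
quiet = [0.1, 0.2, 0.1, 0.15, 0.1, 0.2, 0.12, 0.18]
clap = [0.1, 0.9, 0.2, 1.0, 0.1, 0.95, 0.3, 0.85]

# 2. Windowing and labelling.
data = [("quiet", w) for w in windows(quiet, 4)] + [("clap", w) for w in windows(clap, 4)]

# 3. Training: the resulting "model" is just a handful of numbers.
model = train_centroids(data)

# 4. "Deployment": classify a fresh window, as a device would at runtime.
print(classify(model, [0.2, 0.8, 0.1, 0.9]))  # expected: clap
```

Because the trained "model" here is only two short tuples of numbers, it becomes easy to see why small models suit microcontrollers and why their behavior is easier to reason about.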

In the field of generative AI, we provide students with accessible tools by hosting a Stable Diffusion service and open-source language models on our project server infrastructure. Custom-built tools such as Transferscope, available both as a physical Raspberry Pi device and as a web platform, encourage playful engagement with image generation. With a one-button interface, the tool lets users capture the style of one object and transfer it to another.

Physical AI Prototype: Sound-based snake game controller by Ron Eros Mandic and Lukas Speidel.

Transferscope. Physical device (left) and image generation process (right).

Project by Christopher Pietsch.

3. Enabling Conceptual Engagement

Developing meaningful AI applications poses a particular challenge for designers. To support ideation, we’ve created methods that foster creative friction and encourage unconventional thinking.

Our imagination cards—inspired by movements like Fluxus and Oulipo—provide random constraints that encourage creative exploration. The Input/Output cards, for example, combine sensor technologies with output media, prompting students to consider both technical and conceptual possibilities.
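The mechanism behind the Input/Output cards, a random pairing of a sensing technology with an output medium, can be sketched in a few lines. The card contents below are hypothetical examples, not the actual deck, and seeding the random generator is an assumed convenience for repeating a draw in class.

```python
import random
from typing import Optional

# Hypothetical card decks; the real cards may differ.
INPUT_CARDS = ["thermal camera", "microphone", "accelerometer", "light sensor", "heart-rate sensor"]
OUTPUT_CARDS = ["sound", "vibration", "light", "text", "movement"]


def draw_pairs(n: int, seed: Optional[int] = None) -> list[tuple[str, str]]:
    """Draw n random (input, output) card pairs; a fixed seed repeats the same draw."""
    rng = random.Random(seed)
    return [(rng.choice(INPUT_CARDS), rng.choice(OUTPUT_CARDS)) for _ in range(n)]


for sensor, medium in draw_pairs(3, seed=7):
    print(f"Input: {sensor} -> Output: {medium}")
```

Each printed pair works as a constraint to respond to, much as a physical card would.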

We explore multiple approaches to AI interaction design. Metaphors serve as design resources, as in the “Caring for Machines” workshop, which reimagines human-AI relationships through the lens of care. Experimental methods such as thing ethnography and object personas encourage students to take the perspective of technological objects, revealing their unique affordances without requiring deep technical knowledge.

The Futures Lenses course format uses generative AI as a co-designer for creating speculative scenarios and fictional design objects. By deliberately applying constraints, ambiguity, or exaggeration in prompts, the course provokes errors and unexpected results that become sources for both critical and creative thinking—helping students defamiliarize everyday objects, expose biases, and gain inspiration through iterative exploration.
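One way to picture the deliberate prompt manipulation described above is to generate a plain baseline prompt alongside variants that add a constraint, an ambiguity, or an exaggeration, and then compare what a generative model returns for each. The modifier phrases and the function name `lens_prompts` are hypothetical; the course's actual prompts are not reproduced here, and the sketch stops before any call to an image or language model.

```python
# Hypothetical modifier phrases, one per strategy named in the text.
STRATEGIES = {
    "constraint": "that has to work without electricity",
    "ambiguity": "that is somehow both a tool and a companion",
    "exaggeration": "that is a thousand times larger than usual",
}


def lens_prompts(everyday_object: str, year: int) -> dict[str, str]:
    """Return a plain baseline prompt plus one variant per strategy."""
    base = f"A {everyday_object} in the year {year}"
    prompts = {"baseline": base}
    for name, modifier in STRATEGIES.items():
        prompts[name] = f"{base}, {modifier}"
    return prompts


for name, prompt in lens_prompts("lamp", 2050).items():
    print(f"{name:>12}: {prompt}")
```

Keeping the baseline next to the variants makes it easier to notice which stereotypes a model reproduces by default and which ones a modifier disrupts.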

Caring for Machines: Social Spark detects awkward silences in conversations and fills them with random facts or jokes. Project by Philipp Maginot and Sebastian Weinert.

Experimental methods: Students role-playing a fitness tracker (left) and a digital companion (right).

Futures Lenses: A speculative concept (left) and a catalog of fictional things (right), showing a lamp for promoting well-being through symbiotic, reciprocal interactions. Project by Anja Gutmann, Lea Haferbier and Carolin Kaltwasser.

4. Encouraging Critical Reflection

Critical examination of AI’s societal, ethical, and ecological implications forms the fourth pillar. This happens through both theoretical discussion and hands-on engagement that sparks reflection on emerging phenomena—from biases in training datasets to environmental costs of computation, from the evolving role of designers to questions of responsibility in shaping these technologies.

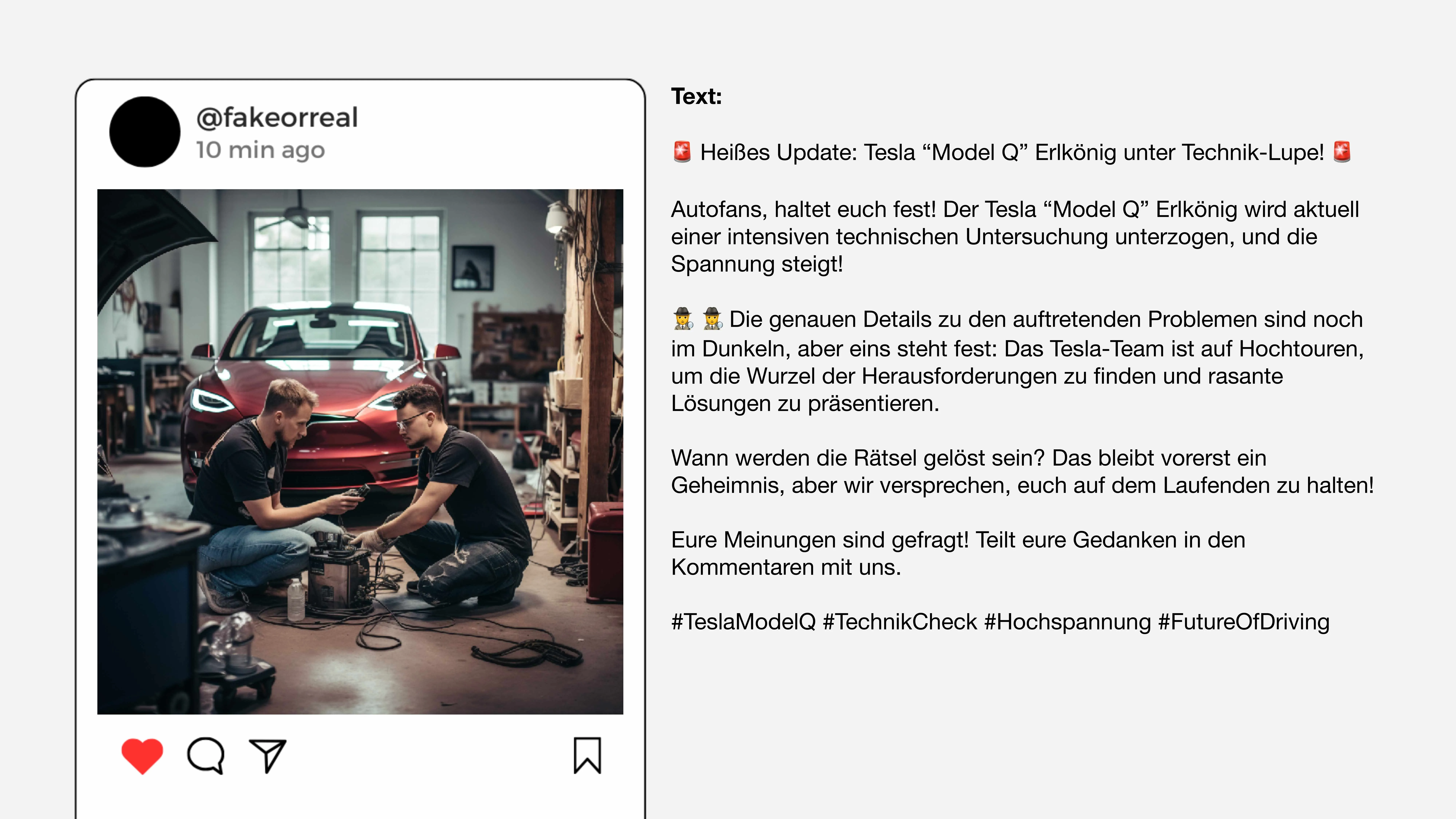

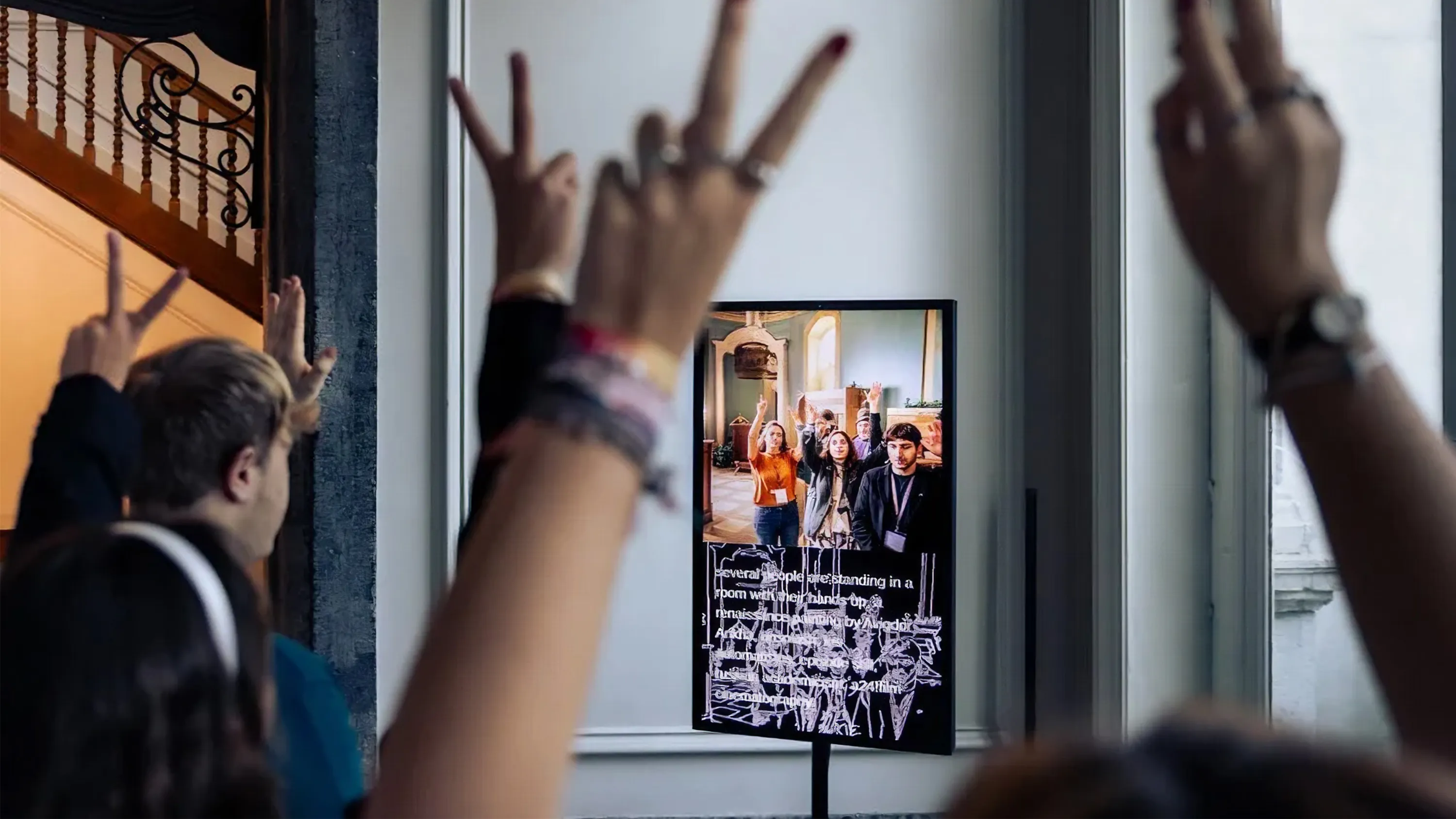

Formats like Fake or Real (co-developed by the AI+Design Lab and Lisa Kern) challenge students to create convincing but fabricated social media content and then to distinguish fake from real posts, revealing how easily misinformation can be produced and which indicators might suggest inauthenticity. The unStable Mirror installation makes AI biases tangible by highlighting how generative systems reflect and amplify existing societal patterns.

Fake or Real: Fictional social media content. Students had to work out whether the content was fake or real.

unStable Mirror exhibited at KIKK Festival in Namur. Photo by Quentin Chevrier.

Project by Christopher Pietsch.

Moreover, exploring technological futures with AI offers another strategy for critical reflection. The Futures Lenses format doesn’t just support the exploration of novel concepts; it also exposes stereotypical future visions embedded in and reproduced by generative AI systems. Recognizing and questioning these mainstream narratives becomes a key skill as AI becomes more prevalent in design ideation.

Lessons from Three Years of Practice

Implementing these pillars across various formats—from compact workshops to extended project courses—has yielded valuable insights.

Sequential formats matter. Balancing technical depth and conceptual engagement within a single teaching format proved challenging. This points to the need for consecutive formats that start with foundational technical knowledge, deepen it through hands-on engagement, and then move on to advanced conceptual exploration and critical reflection.

Different stages need different tools. Simple tools support early exploration and unexpected discoveries, while complex tools enable refinement and precise control as projects mature.

Co-creation requires openness and letting go. Teaching AI Intuition requires shifting students’ mindset: they must learn to embrace AI as a co-creator and agent of surprise rather than chase the “perfect prompt.”

AI-generated concepts enable critical distance. While students often replicate stereotypical AI narratives in their preliminary ideas, seeing these narratives echoed in AI-generated outputs provides critical distance: the outputs become a mirror for examining mainstream metaphors and future narratives.

Experimental methods unlock new perspectives. Object-centered and performative approaches enabled students to break away from solution-oriented thinking, encouraging in-depth discussion of ethical dimensions and designers’ responsibility in shaping these technologies.

Looking Forward

As AI technologies evolve, our teaching approaches must evolve with them. What remains constant is our commitment to AI Intuition—a holistic understanding that enables designers to engage meaningfully with AI, make informed decisions, and critically evaluate implications.

The tools, methods, and insights described represent three years of collaborative development by the AI+Design Lab team. We offer them as starting points for others navigating similar territory in design education, and we remain committed to sharing our approaches and learning from the community as the field continues to develop.

References:

[1] Flechtner, R., & Tost, J. (2025). Building AI intuition – Four educational pillars for teaching AI in design at AI+D Lab. In M. Grund, J. T. Wallenborn, L. Scherffig, & F. Jenett (Eds.), un/learn ai: Integrating AI in aesthetic practices (Vol. 3, pp. 30–42). Hochschule Mainz. https://doi.org/10.25358/openscience-13021

[2] Flechtner, R., & Stankowski, A. (2023). AI Is Not a Wildcard: Challenges for Integrating AI into the Design Curriculum. Proceedings of the 5th Annual Symposium on HCI Education, 72–77. https://doi.org/10.1145/3587399.3587410