Transferscope — Synthesized Reality: Sample anything. Transform everything

Transferscope is a working prototype that merges human creativity with artificial intelligence, resulting in a handheld device that transforms our physical world through AI. The device allows users to capture an object or concept with a simple click and seamlessly blend it onto any scene, creating new and imaginative realities.

With its single-button interface and clear screen feedback, users can swiftly capture and transform images, allowing them to focus on their creativity and experimentation. This simple design ensures that the technology seamlessly supports the process without causing any distractions or complications. The device is lightweight and portable, making it easy to carry and use in various environments.

A Maker project

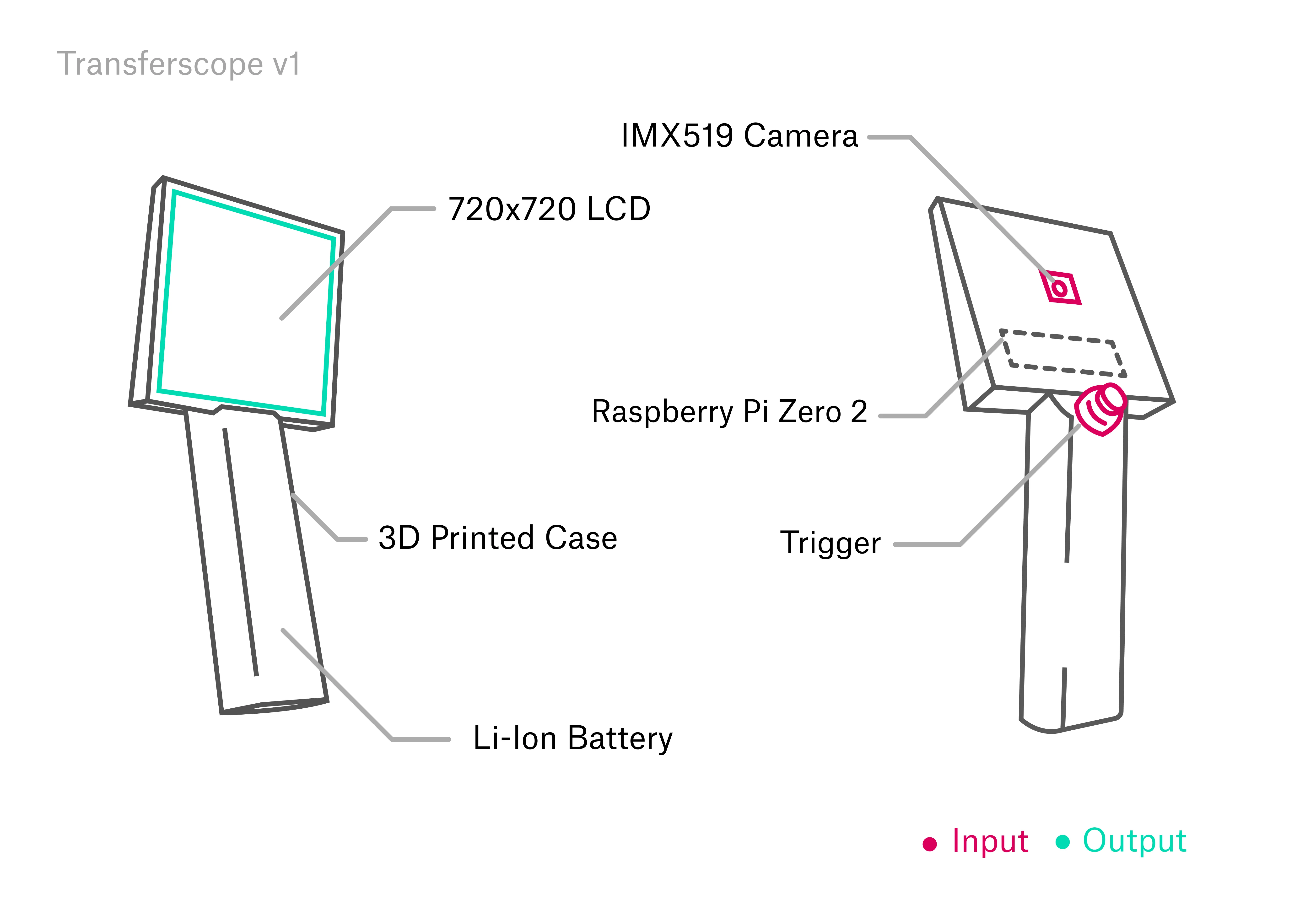

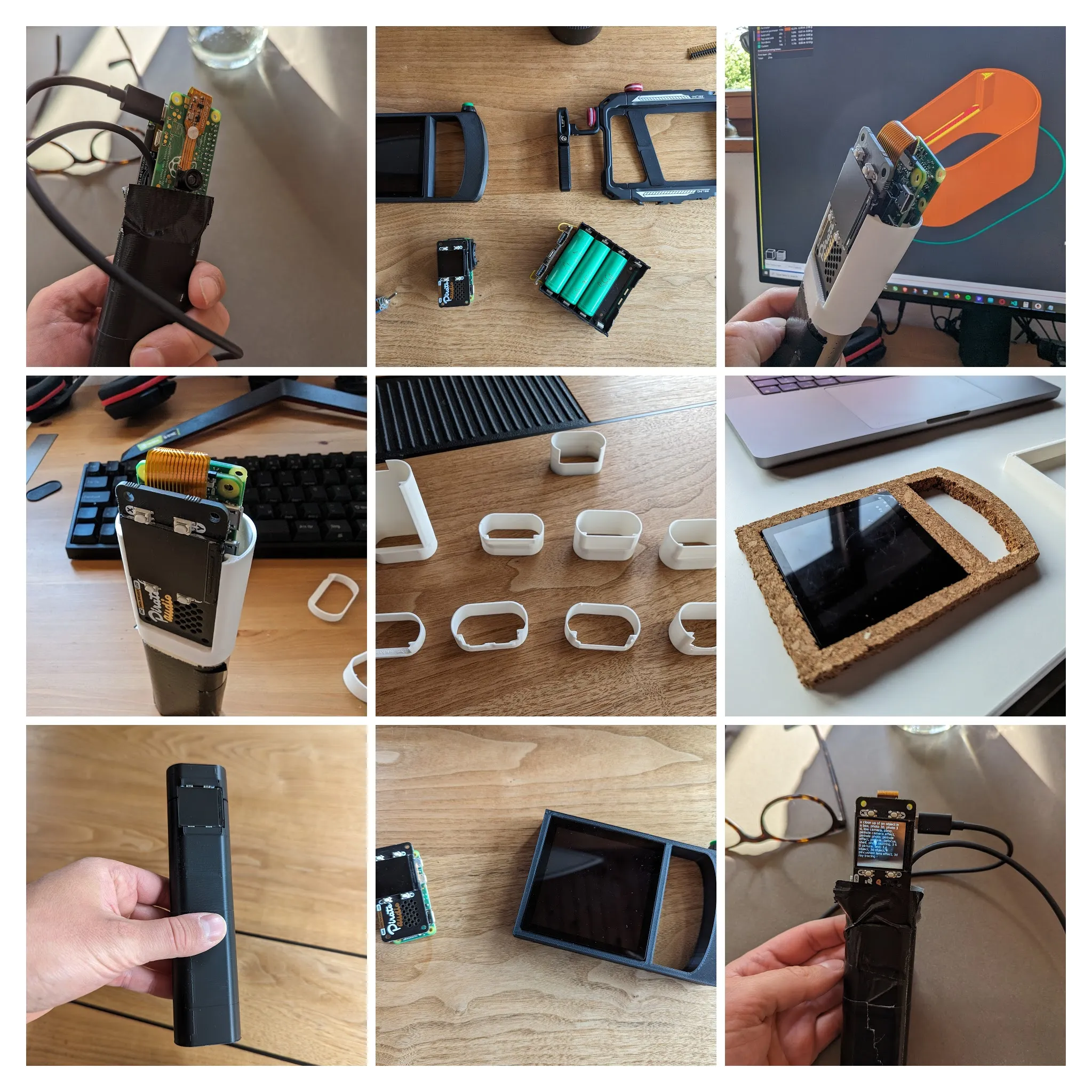

Transferscope’s handheld device is powered by a Raspberry Pi Zero 2, encased in a custom 3D-printed housing. It features a 720x720 pixel screen (HyperPixel) and integrated Li-Ion batteries (2x 18650). The device runs Debian with a custom Python script for image capture and processing (PyGame, OpenCV, Asyncio, libcamera). Edge detection runs directly on the device, and the result is sent with additional data to a server (RTX 4080, Intel i7-12700K), which interprets the image and generates the output.
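To illustrate the on-device step, here is a minimal sketch of edge detection followed by packaging the result for the server. It is not the project's actual script: the device uses OpenCV, and the text does not say which edge detector or transport format is used. This sketch assumes a plain Sobel gradient threshold written in pure Python, and the function names, the `mode` field, and the JSON layout are all hypothetical.

```python
import base64
import json


def sobel_edges(gray, threshold=128):
    """Return a binary edge map (0 or 255) for a 2D list of grayscale values.

    Assumption: a Sobel gradient threshold stands in for whatever
    detector the device really runs via OpenCV.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    # Border pixels are left at 0 because the 3x3 kernel needs all neighbours.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if abs(gx) + abs(gy) >= threshold:
                edges[y][x] = 255
    return edges


def build_payload(edges, mode):
    """Serialize the edge map plus metadata as JSON (hypothetical format)."""
    raw = bytes(value for row in edges for value in row)
    return json.dumps({
        "mode": mode,  # hypothetical field, e.g. "sample" or "transfer"
        "width": len(edges[0]),
        "height": len(edges),
        "edges": base64.b64encode(raw).decode("ascii"),
    })


# Usage: a tiny image with a vertical boundary between dark and bright.
image = [[0, 0, 255, 255, 255] for _ in range(5)]
payload = build_payload(sobel_edges(image), mode="transfer")
```

Sending only a compact edge map keeps the work on the Raspberry Pi Zero 2 light and leaves the heavy model inference to the server.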

The server runs an optimized pipeline that links two central components: Stable Diffusion for image generation and Kosmos 2, an image interpreter by Microsoft. The image is first interpreted, then passed to an IPAdapter, which synthesizes contextual and visual information into an entirely new image. A ControlNet steers the image generation towards the input geometry. This setup allows Transferscope to transform captured images into new textures and patterns and apply them to a second scene almost instantly (<1s), creating a seamless experience for the user.
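The data flow through the server can be sketched as follows. This is only a structural outline with stand-in callables, not the project's server code: no real model is loaded, and every name here (`GenerationRequest`, `build_request`, `transfer`, `interpret`, `generate`) is hypothetical. It also assumes that the interpreter's caption becomes the text prompt, that the sampled image serves as the IPAdapter reference, and that the edge map of the second scene is the ControlNet input, which the text implies but does not spell out.

```python
from dataclasses import dataclass
from typing import Any, Callable, List

EdgeMap = List[List[int]]


@dataclass
class GenerationRequest:
    prompt: str             # caption from the image interpreter (Kosmos 2 in the project)
    reference_image: bytes  # sampled image, assumed to feed the IPAdapter
    edge_map: EdgeMap       # geometry of the target scene, assumed to feed the ControlNet


def build_request(sample_image: bytes, scene_edges: EdgeMap,
                  interpret: Callable[[bytes], str]) -> GenerationRequest:
    """Interpret the sample first, then bundle all conditioning inputs."""
    caption = interpret(sample_image)
    return GenerationRequest(prompt=caption,
                             reference_image=sample_image,
                             edge_map=scene_edges)


def transfer(sample_image: bytes, scene_edges: EdgeMap,
             interpret: Callable[[bytes], str],
             generate: Callable[[GenerationRequest], Any]) -> Any:
    """Run interpretation, then generation, in that order."""
    return generate(build_request(sample_image, scene_edges, interpret))


# Usage with stand-ins for the real models.
result = transfer(
    b"raw-image-bytes",
    [[0, 255], [255, 0]],
    interpret=lambda image: "a ceramic cup",
    generate=lambda request: f"image of {request.prompt}",
)
```

Passing the models in as callables keeps the orchestration separate from any particular inference library, so the stages can be swapped as the underlying models change.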

AI as a collaborator

Transferscope is more than just a technological demonstration. It offers a new way to view and interact with the world. By allowing users to overlay textures and patterns onto various objects, it encourages a rethinking of everyday environments and objects. This capability can be used for artistic expression, educational purposes, or simply to explore new perspectives. My vision for Transferscope is to capture the essence of today’s generative image AI, utilizing open-source tools and models, marking the current state of the technology. It showcases the shift from genuine photography to synthetic image creation, situating the project at the boundary between reality and the AI-generated content that is infiltrating our daily lives.

While the tech behind the device is complex, the user experience is simple and intuitive. This balance between advanced technology and user-friendly design is what makes it fun and engaging to use. It’s not just technology deciding the result: the user’s guidance directs the transformation. This is an example of a collaboration between human and machine that opens up new possibilities for creativity and exploration.

Process

3D printed backplate with mesh structure for better heat dissipation

The first iteration consisted of two devices: one for sampling and one for transferring

Finding shape and structure for Transferscope

Transferscope in shipping case and hardware components

Christopher turning into a cup

Creating Transferscope was an iterative process, exploring the technology and form of the device. Multiple prototypes were 3D printed, tested and refined. The project is related to the unStable Mirror, a previous project that explored similar themes. It evolved from two devices to one, simplifying the user experience and enhancing the tool’s character. While working on Transferscope, new advancements in AI and image synthesis were made, making it a living project that evolves with the technology.

Credits

- Concept, Idea and Production: Christopher Pietsch

- Extended Team: Benedikt Groß, Aeneas Stankowski, Felix Sewing, Rahel Flechtner

- Illustration: Stamatia Galanis

- Generative Voice: ElevenLabs